Wasabi supports the AWS S3 API multipart upload capability to segment a large file into separate parts that are automatically reassembled as a single file when the file transmission is done. The benefit of this approach is that a large file can be uploaded more efficiently and, in the event of a file transmission failure, the storage application can resume uploading with the part that failed (rather than starting at the beginning).
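To make the segmentation and resume behavior concrete, the following is a minimal sketch of how a client might plan the parts of a file and work out which parts still need to be sent after an interruption. The function names `plan_parts` and `parts_to_resend` are hypothetical helpers for illustration; they are not part of the S3 API or of any Wasabi tooling.

```python
from typing import Iterable, List, Tuple

Part = Tuple[int, int, int]  # (part_number, byte_offset, length)


def plan_parts(file_size: int, part_size: int) -> List[Part]:
    """Split a file of file_size bytes into consecutive parts of part_size bytes.

    The final part holds whatever bytes remain, so it may be shorter.
    """
    if file_size < 0 or part_size <= 0:
        raise ValueError("file_size must be >= 0 and part_size must be > 0")
    parts: List[Part] = []
    offset = 0
    part_number = 1  # S3 part numbers start at 1
    while offset < file_size:
        length = min(part_size, file_size - offset)
        parts.append((part_number, offset, length))
        offset += length
        part_number += 1
    return parts


def parts_to_resend(plan: List[Part], completed: Iterable[int]) -> List[Part]:
    """Return the planned parts whose part numbers are not yet uploaded."""
    done = set(completed)
    return [part for part in plan if part[0] not in done]
```

For example, if parts 1 and 3 of a three-part plan were already uploaded when the transfer failed, `parts_to_resend` returns only part 2, so the upload can continue from there instead of starting over.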

For an explanation of how multipart upload works in the S3 API, review Uploading and copying objects using multipart upload. Wasabi adheres to the approach described in that document with the following exception.

Incomplete multipart uploads are automatically deleted after approximately 31 days so that they do not accumulate in your storage. Wasabi does not require a lifecycle policy to enable this behavior.

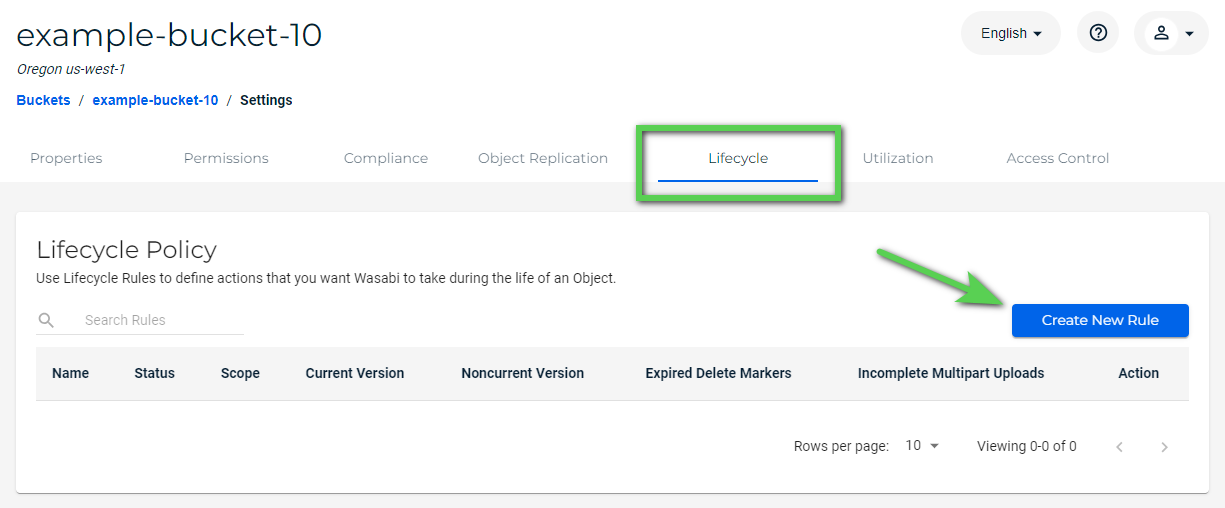

Wasabi also supports the AbortIncompleteMultipartUpload lifecycle action, which lets you configure a lifecycle policy that deletes incomplete multipart uploads after a specified number of days. You can configure this for any bucket by creating a Lifecycle Rule (on the Lifecycle tab of the bucket's Settings).

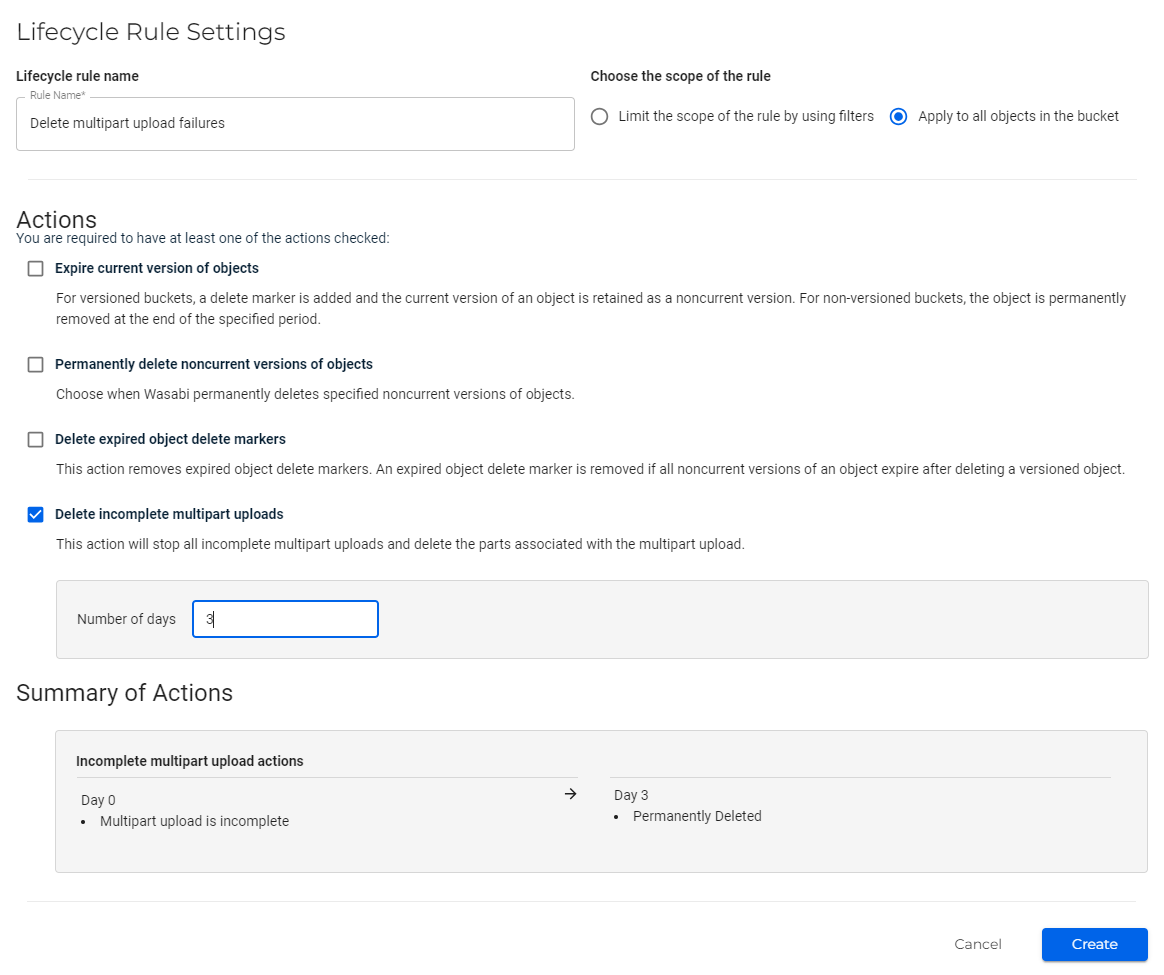

This example sets a deletion time of 3 days:
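A rule like this can also be expressed as a lifecycle configuration document. The sketch below is a minimal example, assuming that the Wasabi endpoint accepts the standard Boto3 `put_bucket_lifecycle_configuration` call in the same way as AWS S3. The helper names `build_abort_rule` and `apply_abort_rule` and the rule ID are hypothetical.

```python
def build_abort_rule(days: int, rule_id: str = "abort-incomplete-multipart") -> dict:
    """Build a lifecycle configuration that aborts incomplete multipart
    uploads the given number of days after they were initiated."""
    if days < 1:
        raise ValueError("days must be at least 1")
    return {
        "Rules": [
            {
                "ID": rule_id,  # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # empty prefix: apply to the whole bucket
                "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": days},
            }
        ]
    }


def apply_abort_rule(s3_client, bucket_name: str, days: int) -> None:
    """Send the rule to the bucket using an existing Boto3 S3 client."""
    s3_client.put_bucket_lifecycle_configuration(
        Bucket=bucket_name,
        LifecycleConfiguration=build_abort_rule(days),
    )
```

Calling `apply_abort_rule(s3, 'your-bucket-name', 3)` would request a 3-day rule. In the S3 API this call replaces the bucket's existing lifecycle configuration, so any rules you want to keep should be included in the same document.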

Aborted Multiparts

Aborted multiparts occur when a multipart upload begins but is not completed due to a planned event (the sending application intentionally stops the multipart upload) or unplanned event (the transmission of the part is unexpectedly interrupted). Wasabi, like AWS, bills for the storage associated with uncommitted multiparts (aborted or abandoned) as long as they are kept in the system as active storage (30 days).
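Because uncommitted parts are billed while they remain in the system, it can help to find and abort multipart uploads that will never be completed. The following is a minimal sketch using the standard S3 `list_multipart_uploads` and `abort_multipart_upload` operations through a Boto3 client; the function name `abort_stale_uploads` and its parameters are hypothetical, and the sketch assumes the endpoint returns the standard S3 response fields.

```python
from datetime import datetime, timedelta, timezone


def abort_stale_uploads(s3_client, bucket_name, older_than_days, now=None):
    """Abort multipart uploads initiated more than older_than_days ago.

    Returns a list of (key, upload_id) pairs that were aborted.
    """
    now = now or datetime.now(timezone.utc)
    cutoff = now - timedelta(days=older_than_days)
    aborted = []
    kwargs = {"Bucket": bucket_name}
    while True:
        page = s3_client.list_multipart_uploads(**kwargs)
        for upload in page.get("Uploads", []):
            # "Initiated" is a timezone-aware datetime in Boto3 responses
            if upload["Initiated"] < cutoff:
                s3_client.abort_multipart_upload(
                    Bucket=bucket_name,
                    Key=upload["Key"],
                    UploadId=upload["UploadId"],
                )
                aborted.append((upload["Key"], upload["UploadId"]))
        if not page.get("IsTruncated"):
            break
        # Continue from where the previous page ended
        kwargs["KeyMarker"] = page["NextKeyMarker"]
        kwargs["UploadIdMarker"] = page["NextUploadIdMarker"]
    return aborted
```

For example, `abort_stale_uploads(s3, 'your-bucket-name', older_than_days=3)` would abort every upload in the bucket that was started more than three days ago and has not been completed.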

An incomplete multipart object is charged as “active” storage for the first 30 days from creation. After 30 days, it is considered to be “deleted” storage based on the retention days set in the billing plan. For example:

If you have a 30-day minimum retention plan, uncommitted multiparts are charged only for active storage for 30 days. After that, you are not charged for deleted storage.

If you have a 90-day minimum retention plan, uncommitted multiparts are charged for active storage for 30 days. After 30 days, you are charged for deleted storage for the next 60 days.
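The two cases above follow the same arithmetic, which can be sketched as a small function. This is a simplified model of the rule as described here, assuming the multipart upload is never completed or aborted early; the name `billed_days` is hypothetical and this is not an official billing calculator.

```python
ACTIVE_DAYS = 30  # days an incomplete multipart object is billed as active storage


def billed_days(minimum_retention_days: int) -> dict:
    """Return how many days are billed as active and as deleted storage
    for an uncommitted multipart object under a given retention plan."""
    deleted = max(0, minimum_retention_days - ACTIVE_DAYS)
    return {"active": ACTIVE_DAYS, "deleted": deleted}
```

With a 30-day plan this gives 30 active days and 0 deleted days; with a 90-day plan it gives 30 active days followed by 60 deleted days.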

Active Read with MultiPartUpload

To perform concurrent GET (download) operations while uploading files to Wasabi S3 using multipart uploads, you can follow the steps below. The examples use Python with Boto3, as it is a common choice for interacting with S3-compatible services.

With this setup, you can efficiently upload large files to Wasabi S3 using multipart uploads while simultaneously downloading other files. This method ensures that you make the most of your bandwidth and resources.

Set up your environment. To do so, install Boto3:

    pip install boto3

Configure your credentials and ensure you have your Wasabi access key and secret key ready.

Initialize/start your multipart upload. You need to initiate the multipart upload and get an upload ID.

    import boto3

    # Initialize S3 client
    s3 = boto3.client('s3',
                      endpoint_url='https://s3.wasabisys.com',
                      aws_access_key_id='YOUR_ACCESS_KEY',
                      aws_secret_access_key='YOUR_SECRET_KEY')

    bucket_name = 'your-bucket-name'
    file_name = 'large_file.zip'

    multipart_upload = s3.create_multipart_upload(Bucket=bucket_name, Key=file_name)
    upload_id = multipart_upload['UploadId']

Upload the file in parts. You can read the file in chunks and upload each part.

    part_size = 5 * 1024 * 1024  # 5 MB
    parts = []
    file_path = '/path/to/your/large_file.zip'

    with open(file_path, 'rb') as f:
        part_number = 1
        while True:
            data = f.read(part_size)
            if not data:
                break
            part = s3.upload_part(
                Bucket=bucket_name,
                Key=file_name,
                PartNumber=part_number,
                UploadId=upload_id,
                Body=data
            )
            # Record the ETag of each part; it is required to complete the upload
            parts.append({'ETag': part['ETag'], 'PartNumber': part_number})
            part_number += 1

    # Complete the multipart upload
    s3.complete_multipart_upload(Bucket=bucket_name,
                                 Key=file_name,
                                 UploadId=upload_id,
                                 MultipartUpload={'Parts': parts})

Start downloading files concurrently. While the above upload is in progress, you can start downloading files. Use threading or asynchronous programming to allow concurrent operations. For example, to download a file in a separate thread:

    import threading

    def download_file():
        s3.download_file(bucket_name, file_name, '/path/to/downloaded_file.zip')

    # Start the download in a separate thread
    download_thread = threading.Thread(target=download_file)
    download_thread.start()

Monitor upload progress. You can print progress messages or use a progress library to give feedback on the upload.
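One way to print progress messages is a small callable that accumulates the number of bytes sent. The sketch below is illustrative only; the class name `UploadProgress` is hypothetical, and a lock is used so the counter stays correct if it is updated from more than one thread.

```python
import threading


class UploadProgress:
    """Track and print how many bytes of an upload have been sent."""

    def __init__(self, total_bytes: int):
        self.total_bytes = total_bytes
        self.sent_bytes = 0
        self._lock = threading.Lock()

    def __call__(self, bytes_amount: int) -> float:
        # Update the running total under a lock, then report the percentage
        with self._lock:
            self.sent_bytes += bytes_amount
            sent = self.sent_bytes
        percent = 100.0 * sent / self.total_bytes if self.total_bytes else 100.0
        print(f"uploaded {sent} of {self.total_bytes} bytes ({percent:.1f}%)")
        return percent
```

In a part-by-part upload loop, you could create `progress = UploadProgress(os.path.getsize(file_path))` before the loop (this needs `import os`) and call `progress(len(data))` after each successful `upload_part`. The same object can be passed as the `Callback` argument of Boto3's `upload_file`, which calls it with the number of bytes transferred.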

Join the thread. Ensure that the main thread waits for the download thread to finish if needed.

    download_thread.join()
    print("Download completed!")
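As an alternative to managing threads by hand, the upload and one or more downloads can be submitted to a thread pool. The following is a minimal sketch using Python's standard `concurrent.futures` module; the function name `run_concurrently` and its parameters are hypothetical. Note that under standard S3 semantics an object only becomes readable after `complete_multipart_upload` succeeds, so the download tasks here are assumed to fetch other, already existing objects.

```python
from concurrent.futures import ThreadPoolExecutor


def run_concurrently(upload_task, download_tasks, max_workers=4):
    """Run one upload callable and several download callables in parallel.

    Each task is a zero-argument callable. Returns the upload result and a
    list of download results in the order the downloads were given. Any
    exception raised inside a task is re-raised here by result().
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        upload_future = pool.submit(upload_task)
        download_futures = [pool.submit(task) for task in download_tasks]
        upload_result = upload_future.result()
        download_results = [future.result() for future in download_futures]
    return upload_result, download_results
```

For example, `upload_task` could wrap the multipart upload loop in a function, and each download task could be a `lambda` that calls `s3.download_file(...)` for a different key. Waiting on `result()` plays the same role as `join()` and also surfaces errors from the worker threads.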

Additional Considerations

Error Handling: Implement error handling for both upload and download processes to retry on failures.

Adjust Part Size: The part size can be adjusted based on your network conditions and file size, but each part must be between 5 MB and 5 GB. The last part of an upload may be smaller than 5 MB.

Cleanup: Abort multipart uploads that will not be completed so that their parts do not continue to accrue storage charges, and verify that uploads and downloads finished successfully.
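The error handling and cleanup points can be combined in a small helper that retries a failed part and aborts the multipart upload if the part still cannot be sent. This is a minimal sketch, not a definitive implementation: the function name `upload_part_with_retry`, the retry count, and the backoff delays are assumptions, and it catches every exception for brevity where real code would likely catch narrower Boto3 error types.

```python
import time


def upload_part_with_retry(s3_client, *, bucket, key, upload_id, part_number,
                           data, max_attempts=3, base_delay=1.0,
                           sleep=time.sleep):
    """Upload one part, retrying with exponential backoff.

    If every attempt fails, the multipart upload is aborted so its parts
    are not left behind, and the last error is re-raised.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            response = s3_client.upload_part(
                Bucket=bucket,
                Key=key,
                PartNumber=part_number,
                UploadId=upload_id,
                Body=data,
            )
            return {"ETag": response["ETag"], "PartNumber": part_number}
        except Exception:
            if attempt == max_attempts:
                # Cleanup: discard the parts uploaded so far
                s3_client.abort_multipart_upload(
                    Bucket=bucket, Key=key, UploadId=upload_id
                )
                raise
            # Wait 1x, 2x, 4x ... base_delay before the next attempt
            sleep(base_delay * 2 ** (attempt - 1))
```

Aborting on the final failure is a design choice that favors not leaving billable parts behind. If you would rather resume later with the same upload ID, omit the abort call and keep the list of parts that already succeeded.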