Lifecycle policies on Wasabi manage how long objects are retained in your bucket. In newer buckets, you can expire objects with the Lifecycle option on the bucket Settings page (refer to Lifecycle Settings: Creating a Rule) or through the API.

If your bucket does not support Lifecycle policies, you can use the scripted approach described in this article. When an object's age exceeds the retention period, the script removes the expired object.

Data uploaded by S3 backup applications that use their own proprietary algorithms to compress, deduplicate, or split data into blocks in a non-native format before uploading to S3 storage must NOT be deleted manually or through any Lifecycle policy external to the application. Any deletion should be triggered from within the backup application so that the indexes and backup chains in its databases stay consistent.

Two Scripts for Two Different Use Cases

1. Delete ONLY the non-current (old versioned) objects through the Lifecycle policy.

   - Take x-days as user input to determine the retention time.
   - Check the last-modified date of all non-current objects to determine whether they are older than x days.
   - Delete the expired objects.
   - Use a cron job to execute the script automatically once per day.

2. Delete BOTH the current and non-current (old versioned) objects through the Lifecycle policy.

   - Take x-days as user input to determine the retention time.
   - Check the last-modified date of all objects to determine whether they are older than x days.
   - Delete the expired objects.
   - Use a cron job to execute the script automatically once per day.
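The age check that both use cases share can be sketched as follows. This is a minimal illustration, not the script itself: the helper name `select_expired` and the `include_current` flag are hypothetical, and the sample dictionaries are made up to mimic the `Key`, `VersionId`, `IsLatest`, and `LastModified` fields that a `list_object_versions` response contains.

```python
from datetime import datetime, timedelta, timezone


def select_expired(versions, retention_days, now, include_current=False):
    """Return Key/VersionId pairs for versions older than retention_days.

    include_current=False mirrors the first use case (non-current only);
    include_current=True mirrors the second (current and non-current).
    """
    expired = []
    for version in versions:
        if version["IsLatest"] and not include_current:
            continue  # the first use case never touches the current version
        age_in_days = (now - version["LastModified"]).days
        if age_in_days > retention_days:
            expired.append({"Key": version["Key"], "VersionId": version["VersionId"]})
    return expired


# Made-up sample data shaped like entries of a list_object_versions response.
now = datetime(2022, 6, 30, tzinfo=timezone.utc)
sample_versions = [
    {"Key": "a.txt", "VersionId": "v3", "IsLatest": True, "LastModified": now - timedelta(days=40)},
    {"Key": "a.txt", "VersionId": "v2", "IsLatest": False, "LastModified": now - timedelta(days=45)},
    {"Key": "a.txt", "VersionId": "v1", "IsLatest": False, "LastModified": now - timedelta(days=50)},
    {"Key": "b.txt", "VersionId": "v1", "IsLatest": True, "LastModified": now - timedelta(days=5)},
]

non_current_only = select_expired(sample_versions, 30, now)
current_and_non_current = select_expired(sample_versions, 30, now, include_current=True)
print(non_current_only)
print(current_and_non_current)
```

With a 30-day retention period, the first call selects only the two old non-current versions of a.txt, while the second call also selects its 40-day-old current version. The 5-day-old b.txt is kept in both cases.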

Prerequisites

Install boto3, the AWS SDK for Python, before running the script.

Install Python 3 or later to run the script.
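Both prerequisites can be checked from Python before the first run. The following is a minimal sketch; the helper name `check_prerequisites` is hypothetical and is not part of the Wasabi script.

```python
import importlib.util
import sys


def check_prerequisites(min_python=(3, 0)):
    """Return a list of human-readable problems; an empty list means ready."""
    problems = []
    if sys.version_info < min_python:
        problems.append("Python 3 or later is required")
    # find_spec returns None when a top-level package cannot be located
    if importlib.util.find_spec("boto3") is None:
        problems.append("boto3 is not installed (try: python3 -m pip install boto3)")
    return problems


problems = check_prerequisites()
print("ready" if not problems else "\n".join(problems))
```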

Script Details

For this example, the first use case listed above (Delete ONLY the non-current (old versioned) objects through the Lifecycle policy) is used. Scripts for both use cases and instructions for setting up cron/scheduler are provided as links at the end of this article.

Enter the aws_access_key_id and aws_secret_access_key for your account in the script.

Enter the value of delete_after_retention_days according to your requirements.

Enter your bucket name in the bucket variable and the corresponding endpoint for that bucket in endpoint. Note that this example uses Wasabi's us-east-1 storage region. For a bucket in another region, use the appropriate Wasabi service URL.

Enter the prefix based on your requirements in the prefix variable. The script will only delete the expired object(s) under the specified prefix. If you are specifying a prefix, be sure to enter the FULL PREFIX PATH (bucket name NOT included).
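Because a mistake in these variables can cause the wrong objects to be selected, it may help to sanity-check them before any request is sent. The sketch below is an assumption-laden illustration: `validate_settings` is a hypothetical helper, the checks are only examples, and `https://s3.example.com` is a placeholder rather than a real Wasabi service URL.

```python
def validate_settings(access_key, secret_key, retention_days, bucket, prefix, endpoint):
    """Return a list of problems found in the editable variables."""
    problems = []
    if not access_key or not secret_key:
        problems.append("access key and secret key must both be set")
    if not isinstance(retention_days, int) or retention_days < 0:
        problems.append("delete_after_retention_days must be a non-negative integer")
    if not bucket:
        problems.append("bucket must be set")
    if not endpoint.startswith(("http://", "https://")):
        problems.append("endpoint must be a full URL")
    # The prefix is the path inside the bucket, so a leading bucket name is suspicious.
    if bucket and prefix.startswith(bucket + "/"):
        problems.append("prefix appears to start with the bucket name")
    return problems


# Placeholder values for illustration only.
good = validate_settings("key", "secret", 30, "my-bucket", "logs/2022/", "https://s3.example.com")
bad = validate_settings("key", "secret", 30, "my-bucket", "my-bucket/logs/", "https://s3.example.com")
print(good)
print(bad)
```

The prefix check is only a heuristic, since a folder inside the bucket may legitimately share the bucket's name. Treat its message as a warning to review rather than a hard error.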

# Copyright (c) 2022. This script is available as fair use for users. This script can be used freely with Wasabi

# Technologies, LLC. Distributed by the support team at Wasabi Technologies, LLC.

from boto3 import client, Session

from botocore.exceptions import ClientError

from datetime import datetime, timezone

if __name__ == '__main__':

# Editable variables

# __

aws_access_key_id = ""

aws_secret_access_key = ""

delete_after_retention_days = 1 # number of days

bucket = ""

prefix = ""

endpoint = "" # Endpoint of bucket

# __

# get current date

today = datetime.now(timezone.utc)

try:

# create a connection to Wasabi

s3_client = client(

's3',

endpoint_url=endpoint,

aws_access_key_id=aws_access_key_id,

aws_secret_access_key=aws_secret_access_key)

except Exception as e:

raise e

try:

# list all the buckets under the account

list_buckets = s3_client.list_buckets()

except ClientError:

# invalid access keys

raise Exception("Invalid Access or Secret key")

# create a paginator for all objects.

object_response_paginator = s3_client.get_paginator('list_object_versions')

if len(prefix) > 0:

operation_parameters = {'Bucket': bucket,

'Prefix': prefix}

else:

operation_parameters = {'Bucket': bucket}

# instantiate temp variables.

delete_list = []

count_current = 0

count_non_current = 0

print("$ Paginating bucket " + bucket)

for object_response_itr in object_response_paginator.paginate(**operation_parameters):

if 'DeleteMarkers' in object_response_itr:

for delete_marker in object_response_itr['DeleteMarkers']:

if (today - delete_marker['LastModified']).days > delete_after_retention_days:

delete_list.append({'Key': delete_marker['Key'], 'VersionId': delete_marker['VersionId']})

if 'Versions' in object_response_itr:

for version in object_response_itr['Versions']:

if version["IsLatest"] is True:

count_current += 1

elif version["IsLatest"] is False:

count_non_current += 1

if version["IsLatest"] is False and (

today - version['LastModified']).days > delete_after_retention_days:

delete_list.append({'Key': version['Key'], 'VersionId': version['VersionId']})

# print objects count

print("-" * 20)

print("$ Before deleting objects")

print("$ current objects: " + str(count_current))

print("$ non-current objects: " + str(count_non_current))

print("-" * 20)

# delete objects 1000 at a time

print("$ Deleting objects from bucket " + bucket)

for i in range(0, len(delete_list), 1000):

response = s3_client.delete_objects(

Bucket=bucket,

Delete={

'Objects': delete_list[i:i + 1000],

'Quiet': True

}

)

print(response)

# reset counts

count_current = 0

count_non_current = 0

# paginate and recount

print("$ Paginating bucket " + bucket)

for object_response_itr in object_response_paginator.paginate(Bucket=bucket):

if 'Versions' in object_response_itr:

for version in object_response_itr['Versions']:

if version["IsLatest"] is True:

count_current += 1

elif version["IsLatest"] is False:

count_non_current += 1

# print objects count

print("-" * 20)

print("$ After deleting objects")

print("$ current objects: " + str(count_current))

print("$ non-current objects: " + str(count_non_current))

print("-" * 20)

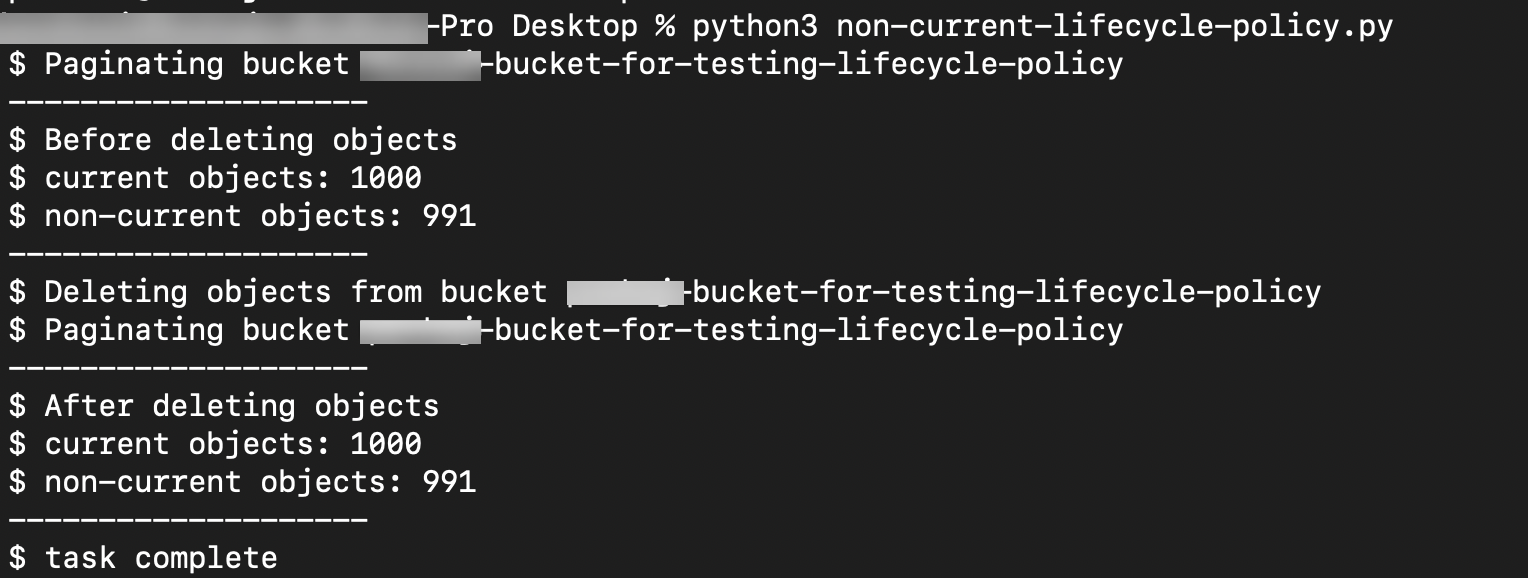

print("$ task complete")The output is:

You can schedule this script with cron or another scheduler so that it runs automatically at the same time every day (for example, at 9:00 AM) to clean up expired objects in your Wasabi buckets.
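Because a scheduled job runs unattended, it can be convenient to pass the retention period on the command line instead of editing the script for each bucket. The following is a minimal sketch, assuming hypothetical option names (`--bucket`, `--days`, `--prefix`) and a hypothetical `parse_args` helper, none of which are part of the script above.

```python
import argparse


def parse_args(argv=None):
    """Parse hypothetical command-line options for an unattended run."""
    parser = argparse.ArgumentParser(description="Delete expired object versions")
    parser.add_argument("--bucket", required=True, help="bucket to clean up")
    parser.add_argument("--days", type=int, default=30, help="retention period in days")
    parser.add_argument("--prefix", default="", help="full prefix path, bucket name not included")
    return parser.parse_args(argv)


# Simulate the arguments a scheduler entry might pass (made-up values).
args = parse_args(["--bucket", "example-bucket", "--days", "7"])
print(args.bucket, args.days, repr(args.prefix))
```

The parsed values would then take the place of the hard-coded bucket, delete_after_retention_days, and prefix variables.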