How do I use IBM Cloud Satellite with Wasabi?

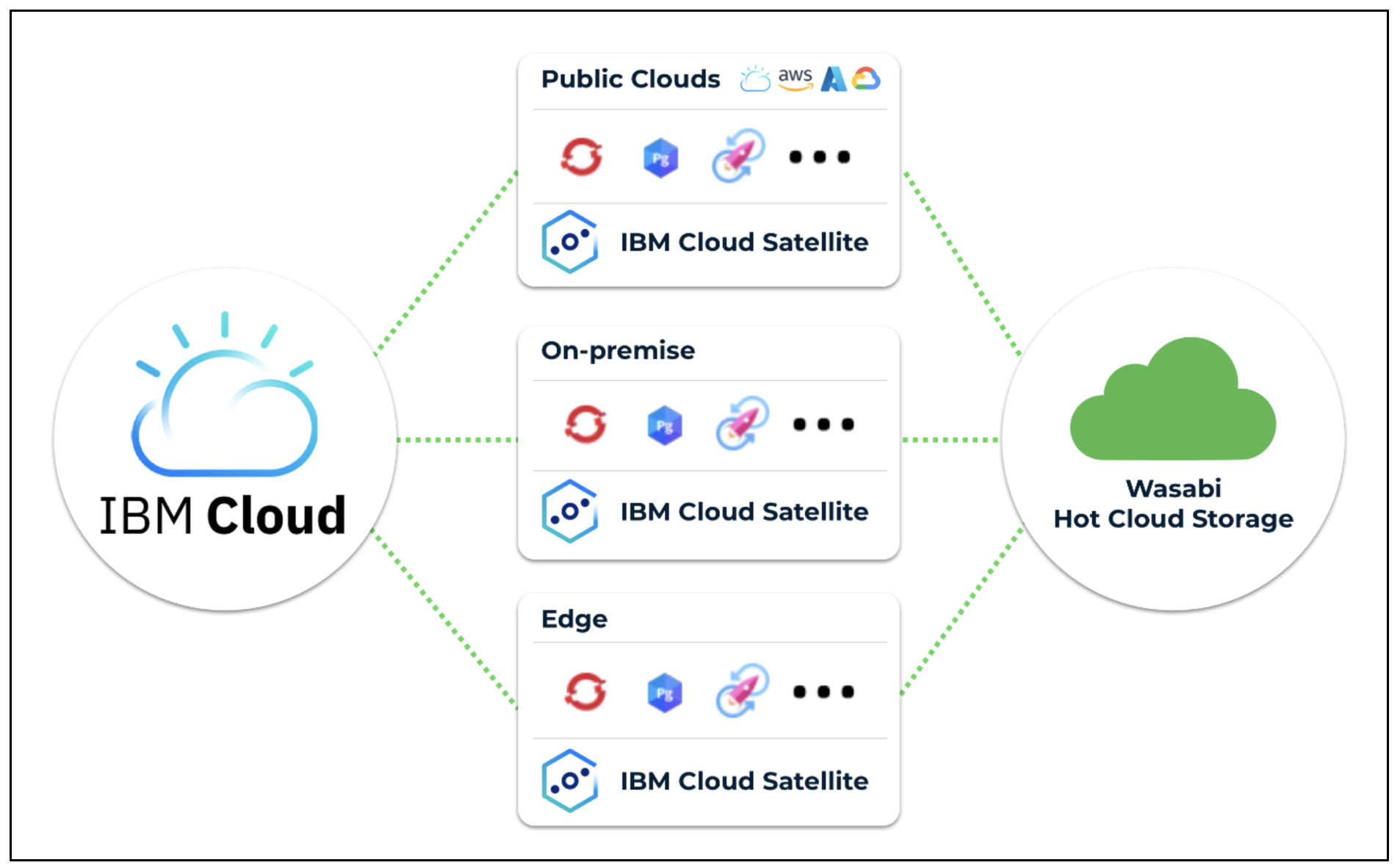

Wasabi has been validated for use with IBM Cloud Satellite. The IBM Cloud Satellite-managed distributed cloud solution delivers cloud services, APIs, access policies, security controls, and compliance. To use Wasabi Object Storage with workloads running in IBM Cloud Satellite locations, follow the instructions below.

Reference Architecture

Prerequisites

On the IBM Cloud side: An IBM Cloud account and a Satellite location created and configured.

On the Wasabi side: An active Wasabi account, at least one bucket, and an Access Key/Secret Key pair.

Integration Scenarios

The IBM Cloud Satellite with Wasabi integration supports multiple use cases, and this article outlines a few of them. To learn more about Satellite use cases in general, refer to Satellite Use Cases.

Base Integration

Satellite locations are mini cloud regions and, in most cases, do not need a direct integration with Wasabi cloud object storage. Any application workload that uses object storage through the S3 API can run unchanged, as long as its API usage does not hardcode AWS (or IBM, MinIO, and so on) endpoints.

Wasabi provides an S3-compatible object storage solution with defined API endpoints. Existing applications that use `boto3`, or any other S3-compatible API library, can supply the Wasabi endpoint and user credentials.
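
To illustrate the point about supplying the endpoint and credentials, here is a minimal sketch of how a `boto3` application might be pointed at Wasabi. The helper names (`wasabi_endpoint`, `s3_client_kwargs`, `list_bucket_names`) are hypothetical and not part of any library. The endpoint pattern for regions other than us-east-1 is an assumption, so check it against Wasabi's published service URLs for your region.

```python
def wasabi_endpoint(region: str) -> str:
    """Return an HTTPS service URL for a Wasabi region.

    ASSUMPTION: regions other than us-east-1 follow the pattern
    s3.<region>.wasabisys.com; confirm against Wasabi's service URL list.
    """
    if region == "us-east-1":
        return "https://s3.wasabisys.com"
    return f"https://s3.{region}.wasabisys.com"


def s3_client_kwargs(region: str, access_key: str, secret_key: str) -> dict:
    """Build the keyword arguments that point an S3 client at Wasabi."""
    return {
        "endpoint_url": wasabi_endpoint(region),
        "region_name": region,
        "aws_access_key_id": access_key,
        "aws_secret_access_key": secret_key,
    }


def list_bucket_names(region: str, access_key: str, secret_key: str) -> list:
    """List bucket names. Needs the third-party boto3 package and network access."""
    import boto3  # imported lazily so the helpers above work without boto3

    client = boto3.client("s3", **s3_client_kwargs(region, access_key, secret_key))
    return [bucket["Name"] for bucket in client.list_buckets()["Buckets"]]
```

The only Wasabi-specific parts are the endpoint URL and the credentials. The rest of the application's S3 calls stay as they are, which is why workloads that do not hardcode an endpoint can run unchanged.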

Below are examples of products in IBM’s data portfolio that work with Wasabi out of the box (OOTB).

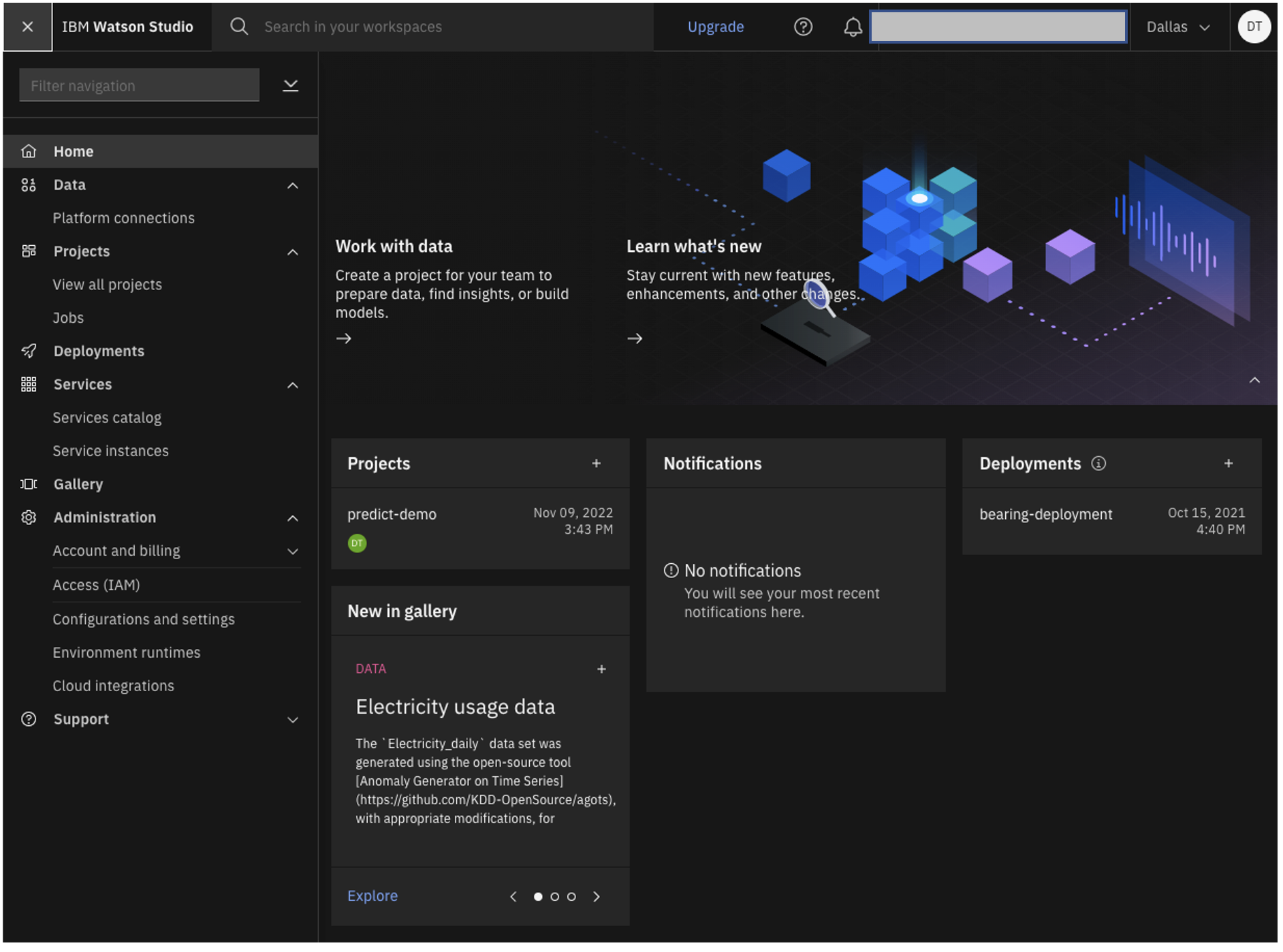

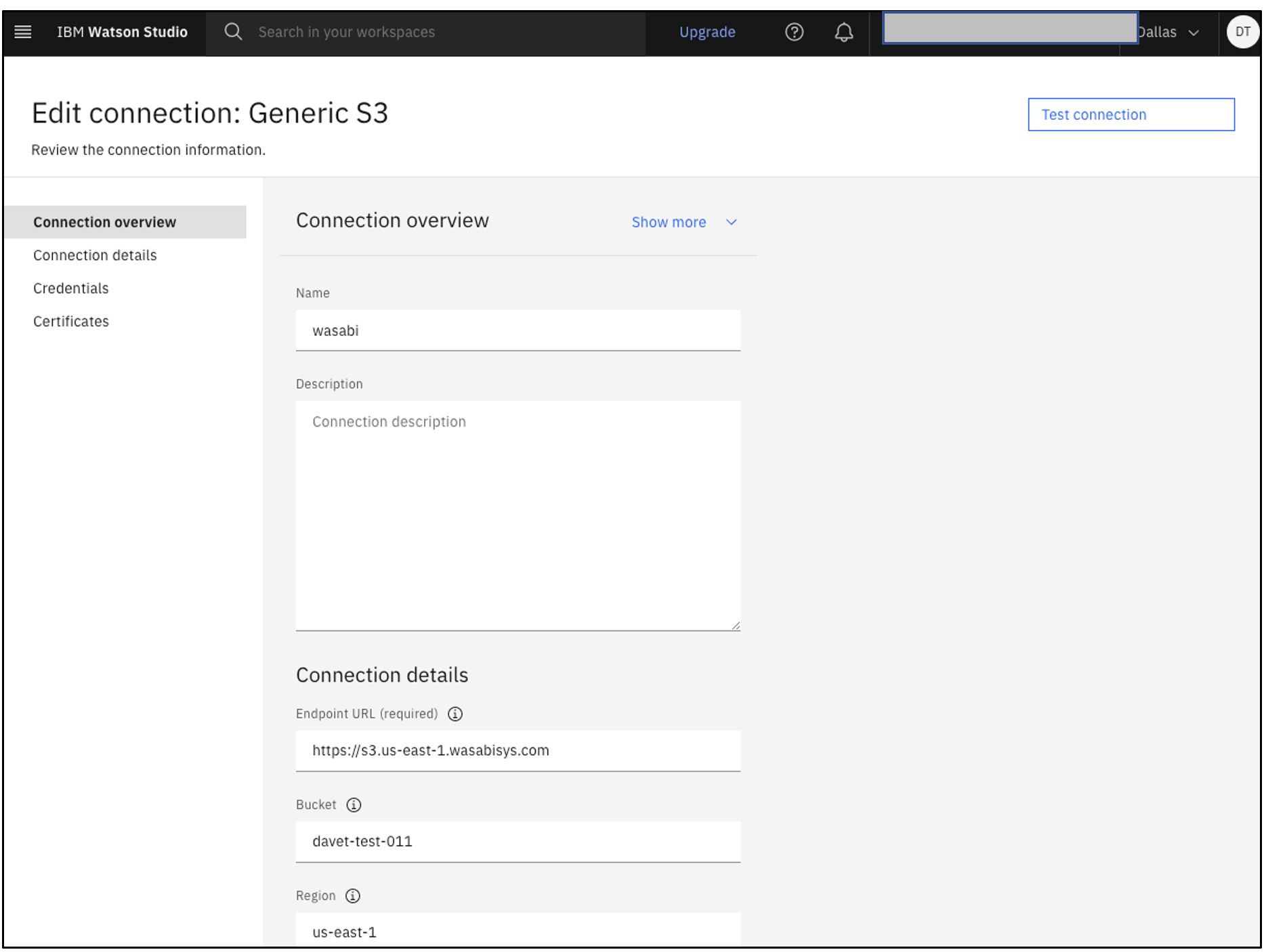

Running IBM Cloud Pak for Data and Watson Studio with Wasabi

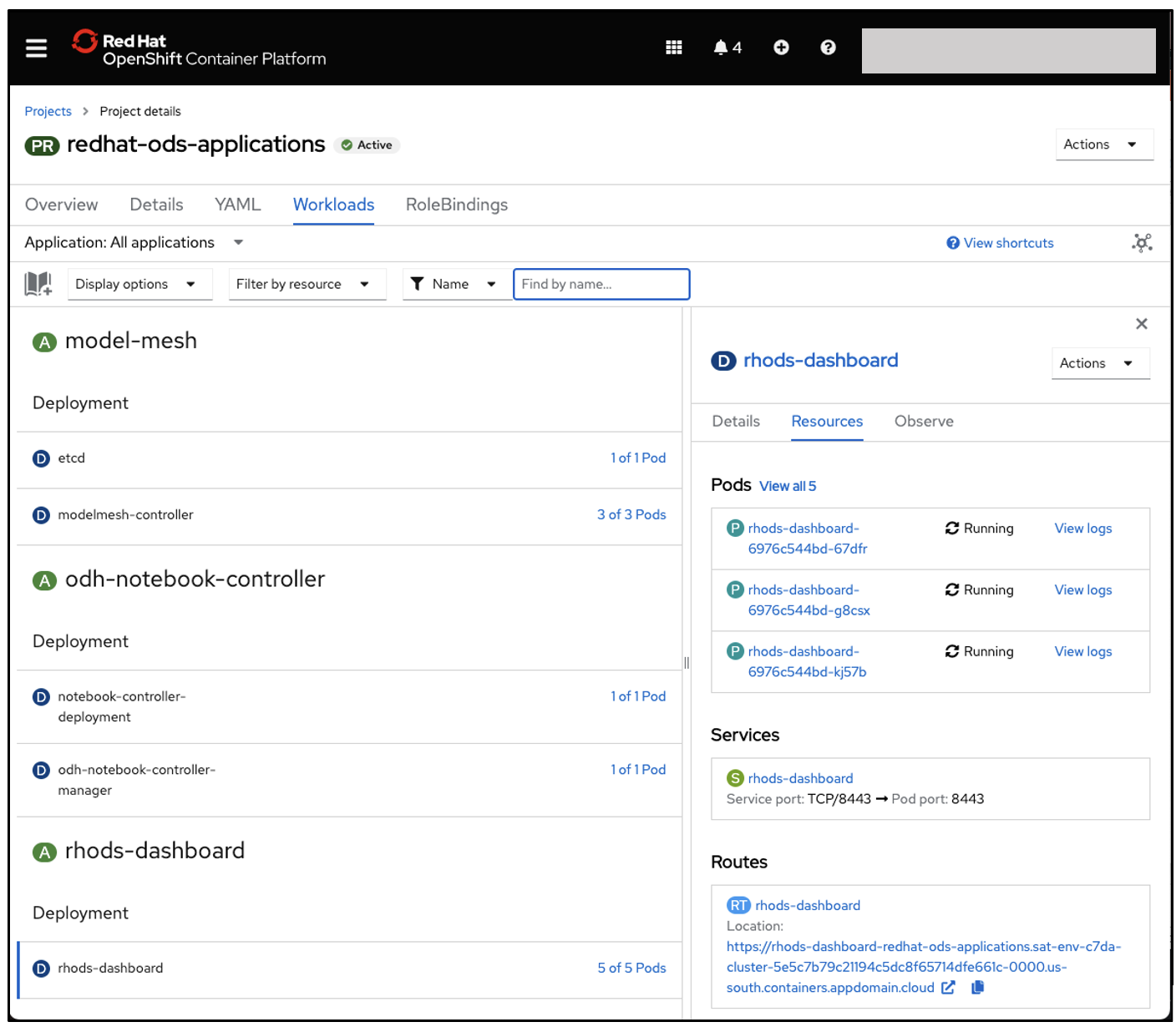

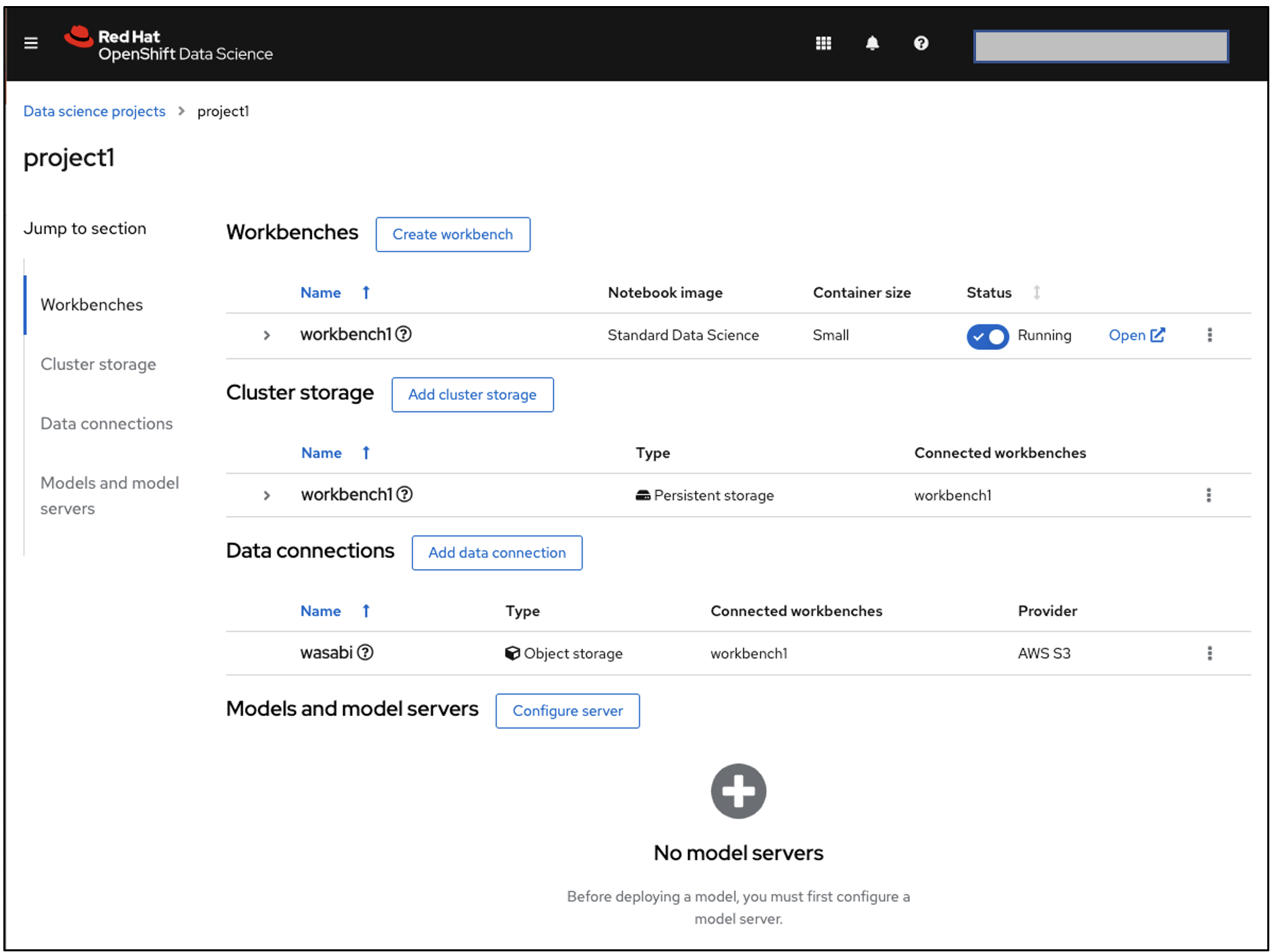

Running Red Hat Open Data Service on Satellite with a Wasabi data connection

Satellite Storage Templates

For workloads that depend on mounted filesystems that utilize object storage in the backend, you can use the IBM Object Storage plugin to define the Wasabi endpoints and bucket(s) to be mounted. Within the context of Satellite, there is a Satellite Storage Template to ease the use of the IBM Object Storage plugin.

With Satellite storage templates, you can create a storage configuration that can be deployed across your clusters without the need to re-create the configuration for each cluster. To learn more about the Satellite Storage Templates, review How do templates work?

To create a Satellite Storage Template:

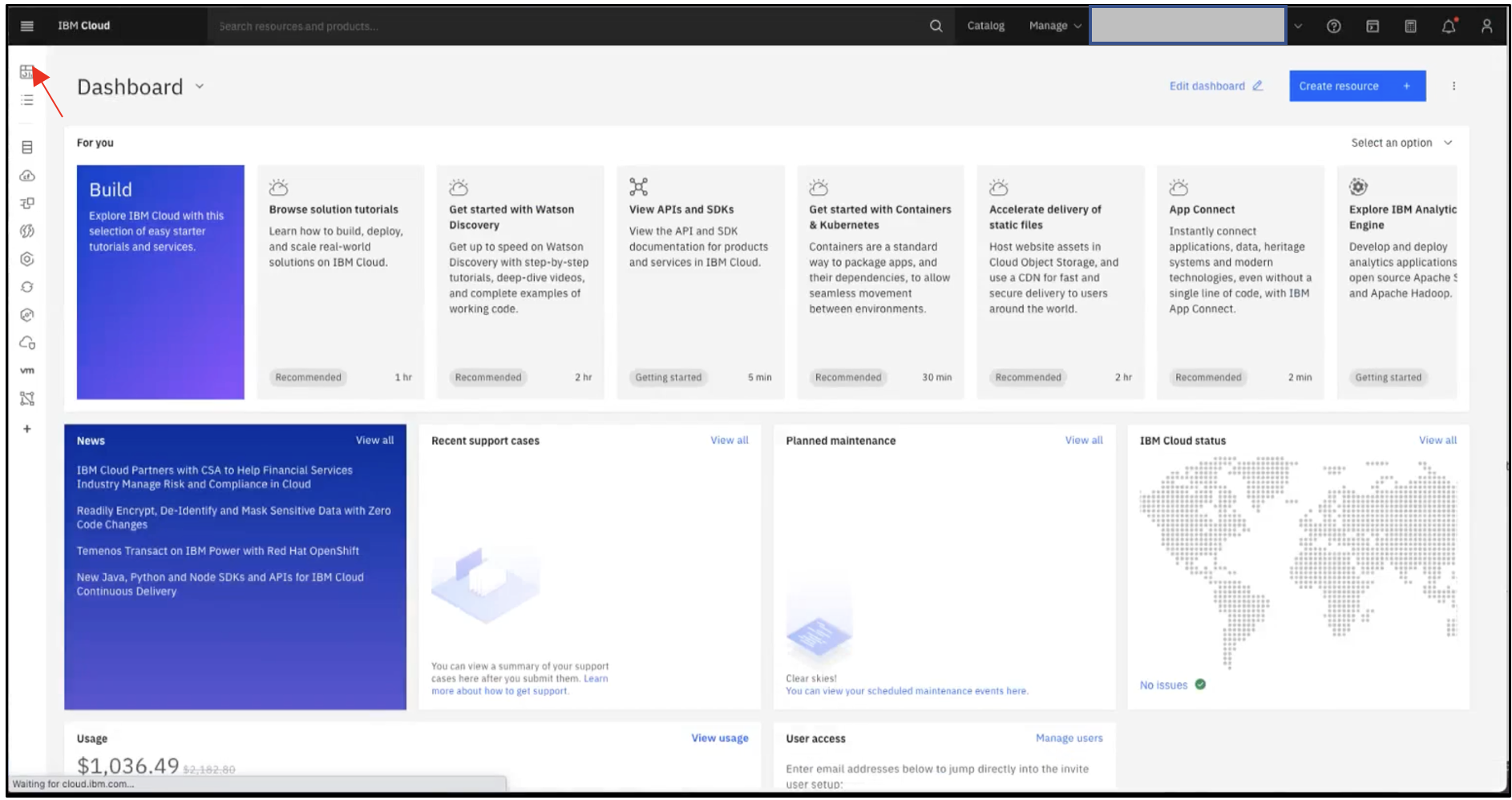

Log in to your IBM Cloud account and click the Dashboard icon.

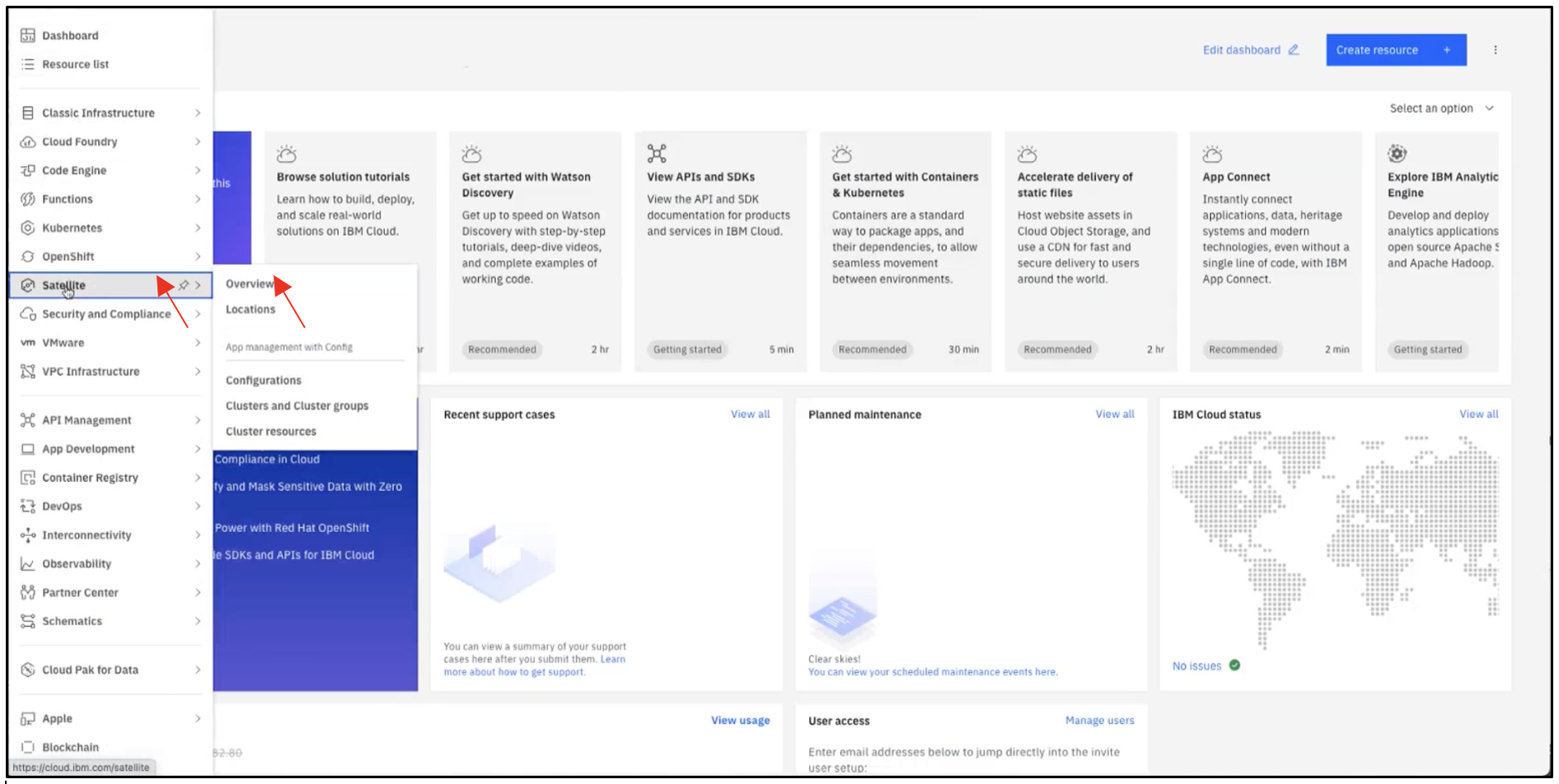

Click Satellite, then click Overview.

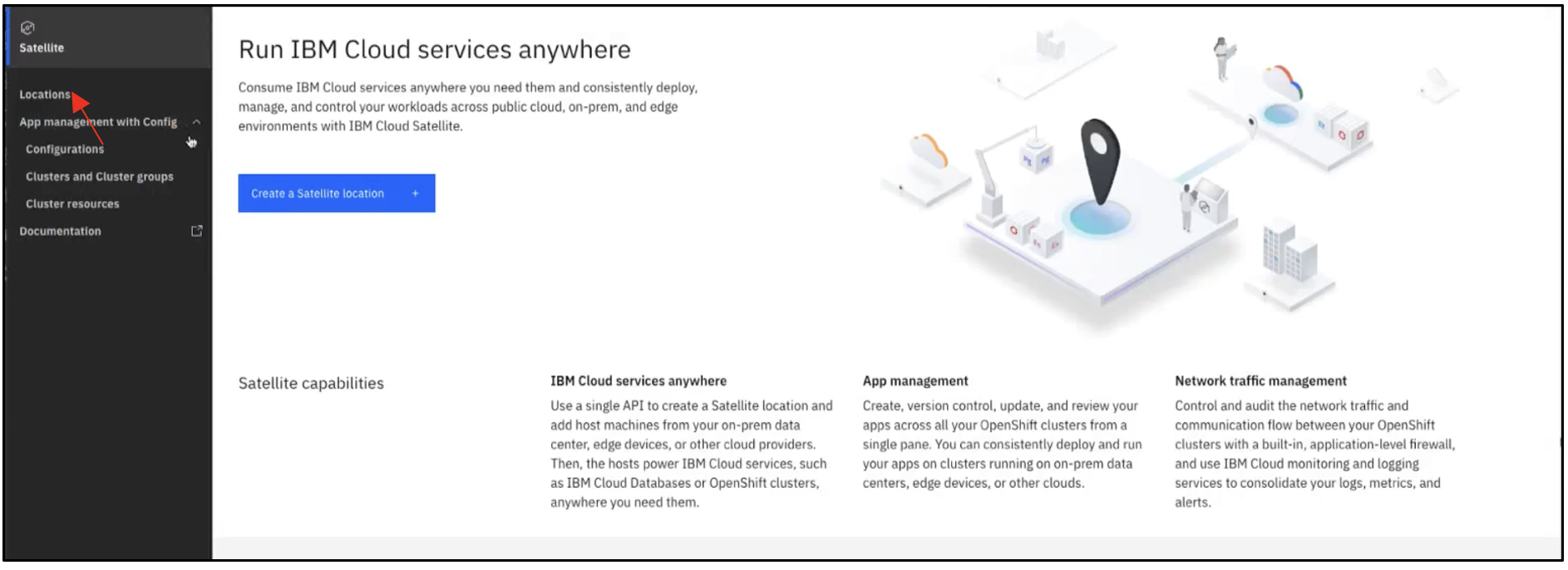

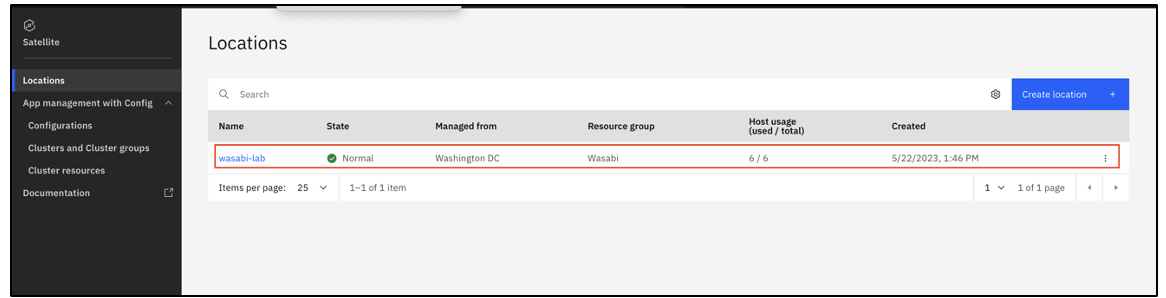

On the Satellite Overview page, click Locations.

On the Locations page, select the desired location.

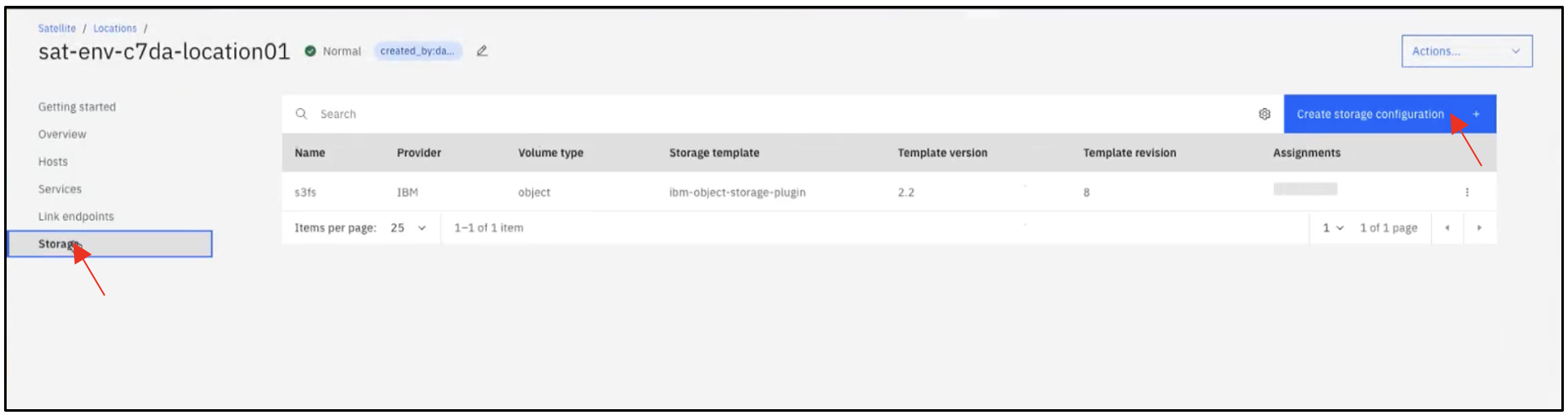

On the Location Configuration page, click Storage. Click Create storage configuration.

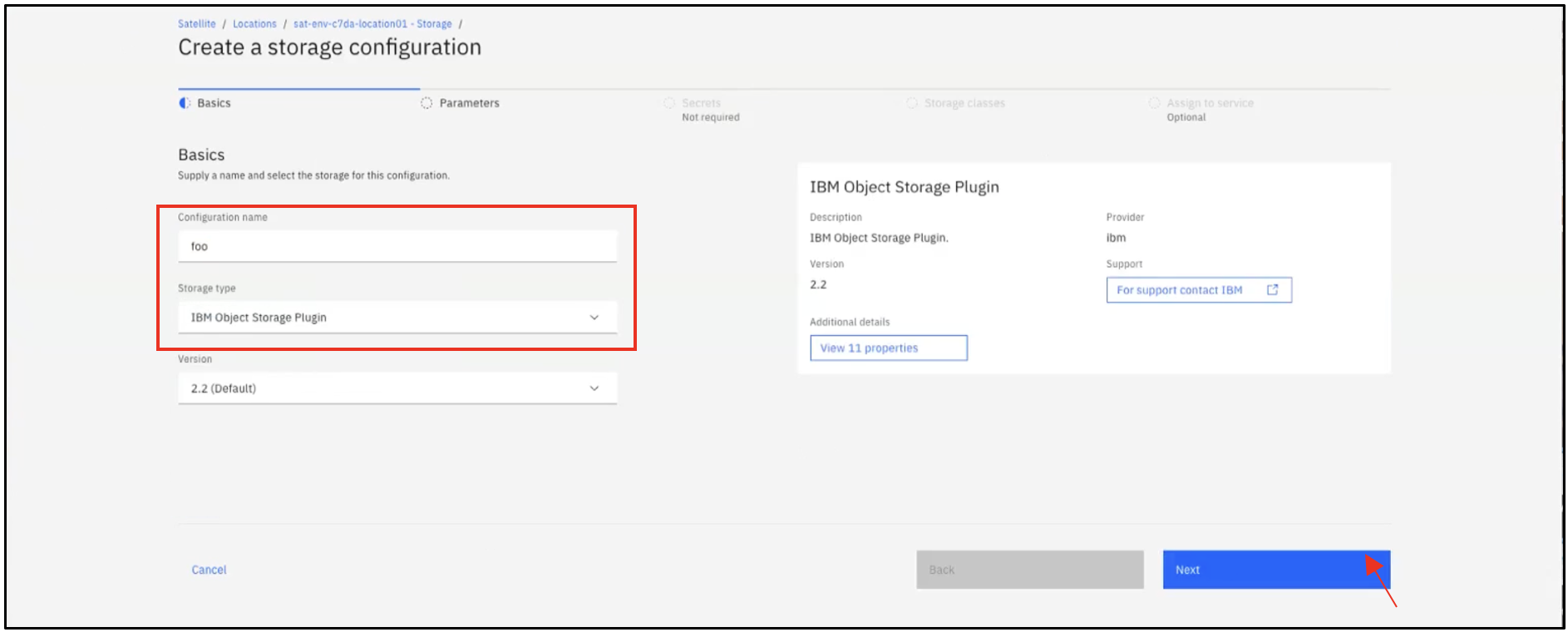

In the Basics section, provide a name for the configuration. Select IBM Object Storage Plugin as the Storage type in the drop-down. Click Next.

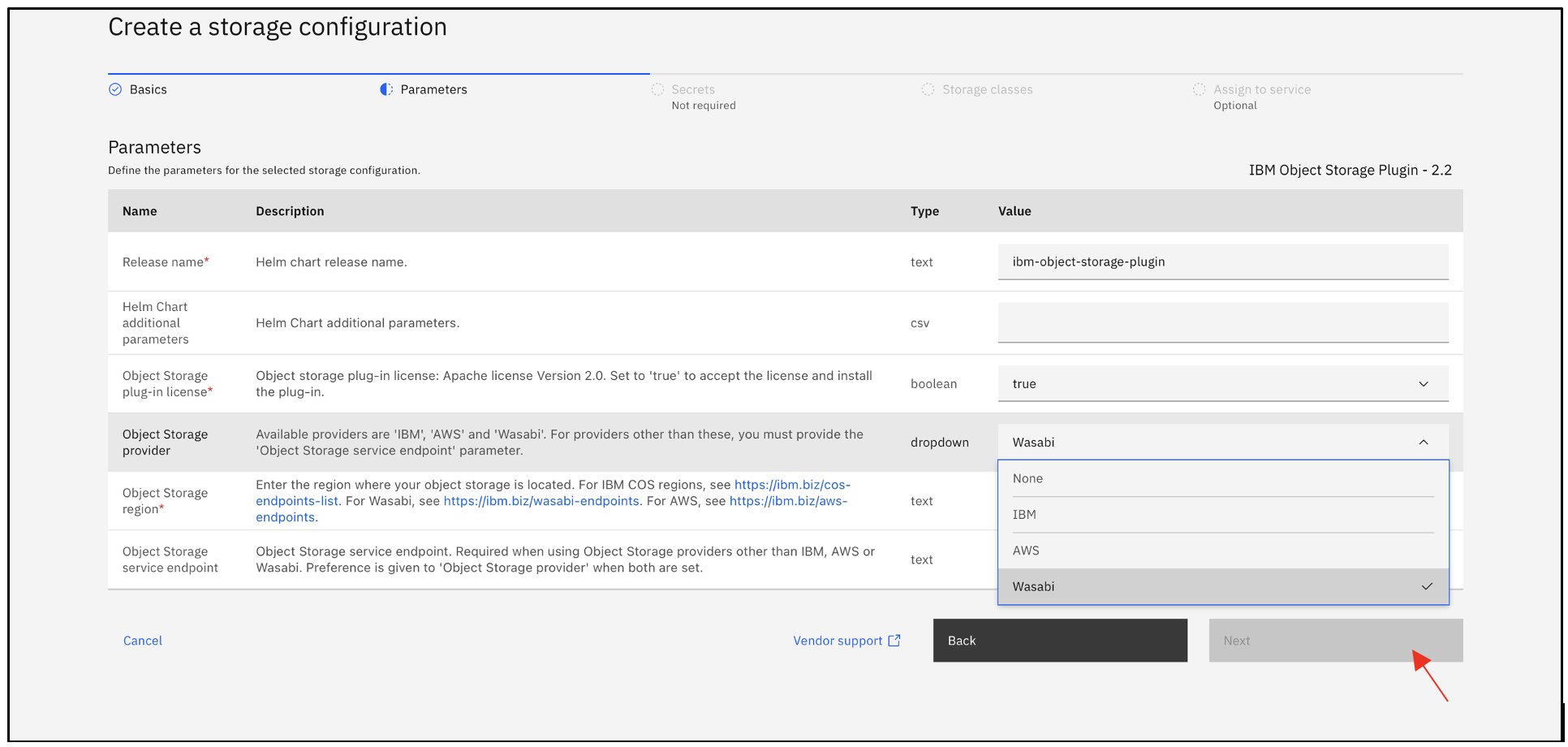

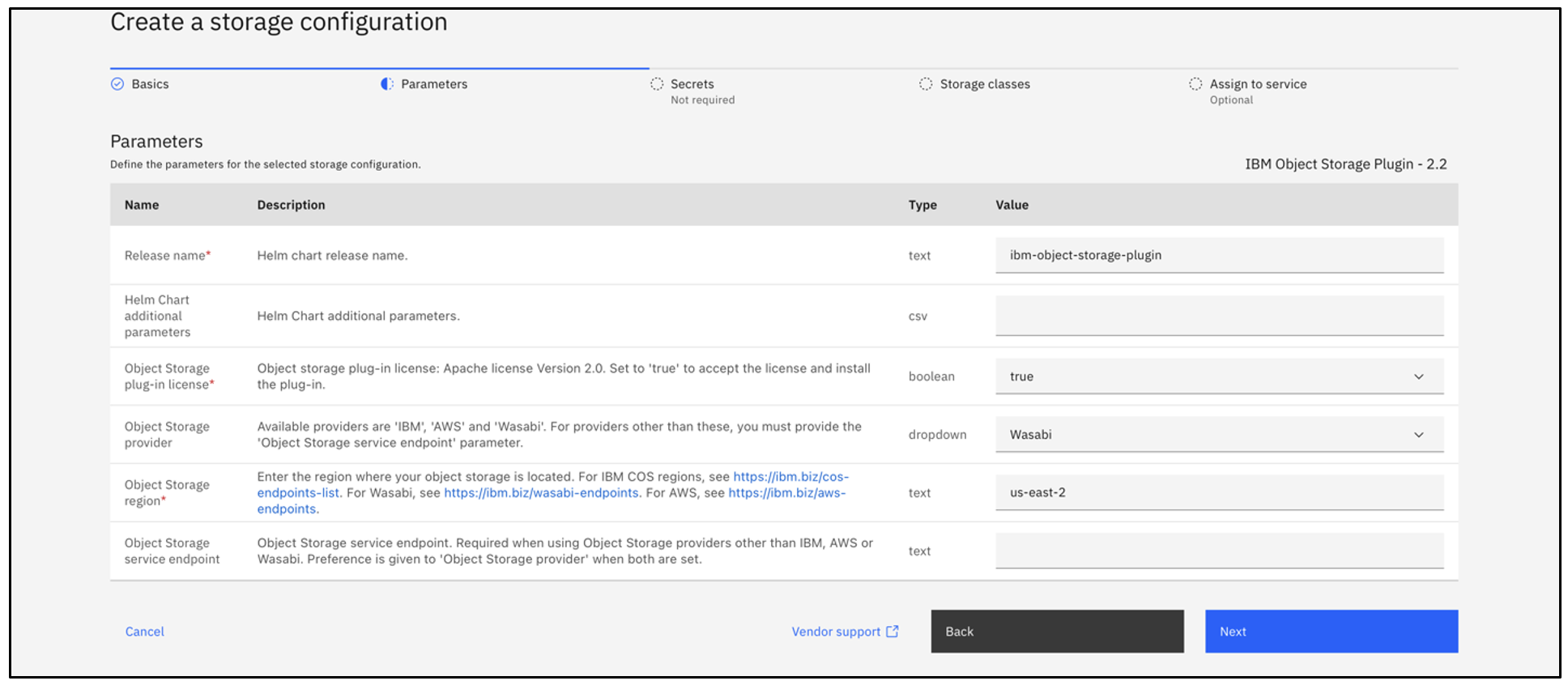

In the Parameters section, change the COS plugin license field to "true" to accept the Apache open-source license terms. Select Wasabi as the Object Storage Provider.

Provide the desired region in the Object Storage region field and click Next.

This configuration example uses Wasabi's us-east-2 storage region. Provide the appropriate region for your configuration.

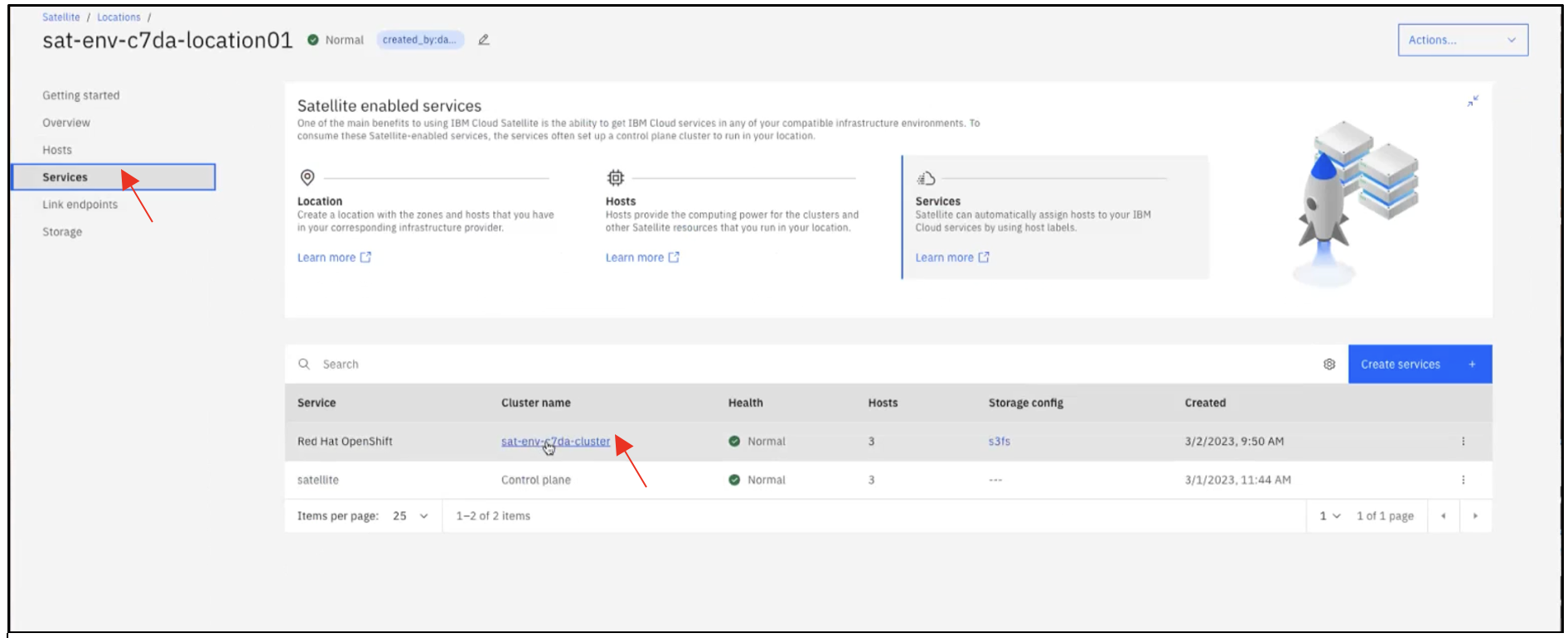

Click through to the Assignment tab and assign the storage template to the desired clusters.

The result will be the creation of storage classes within the assigned OpenShift clusters (which are running in our location in this example).

In each assigned cluster, you also need to create a Kubernetes Secret that holds the Wasabi access credentials. The secret needs two key-value pairs, `access-key` and `secret-key`, which contain the respective Wasabi credentials for the account to use.

Log in to the OpenShift cluster running on the location by clicking Services.

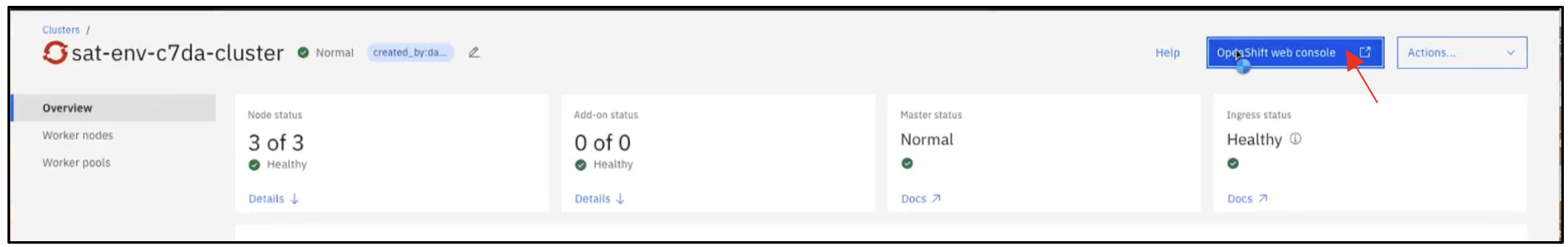

To log in to the OpenShift web UI, click OpenShift web console.

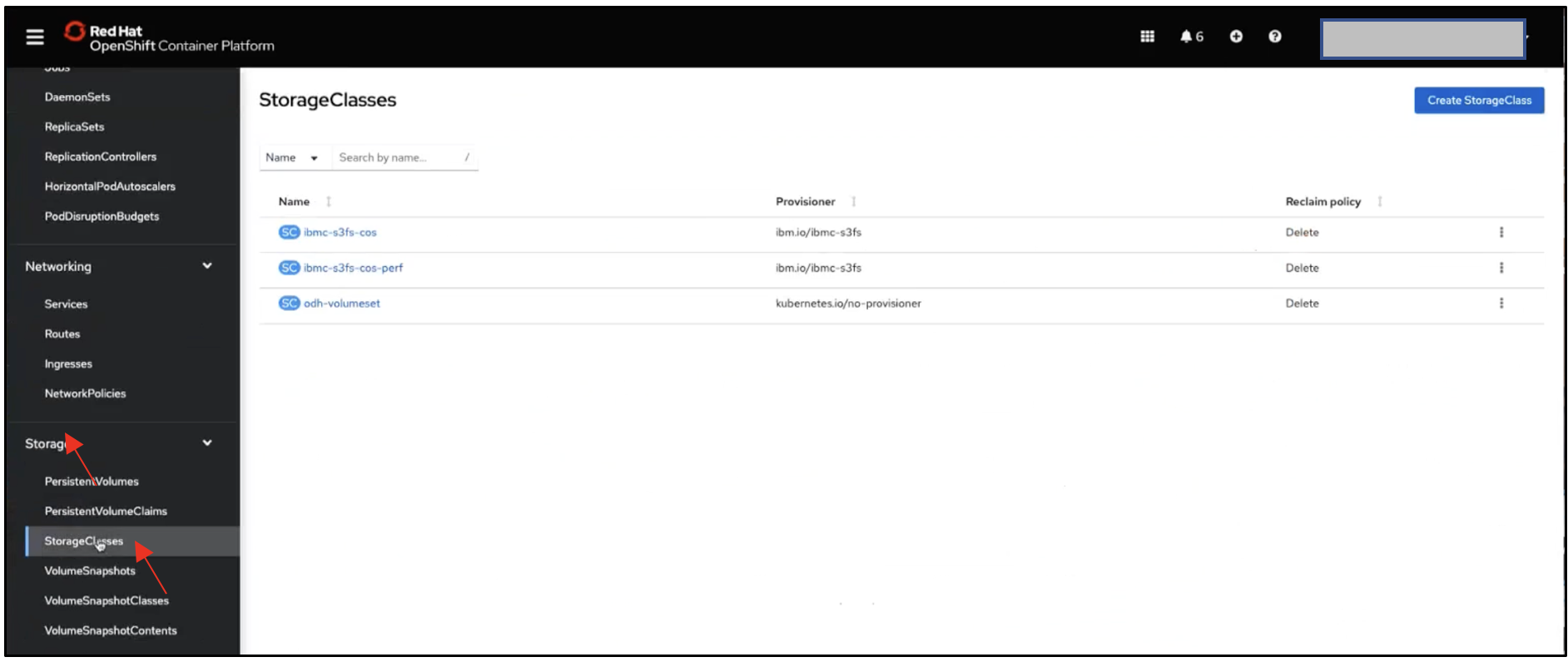

In the OpenShift console, navigate to Storage, then to StorageClasses on the left-hand pane.

These storage classes were created by Satellite from the storage template we created for this location.

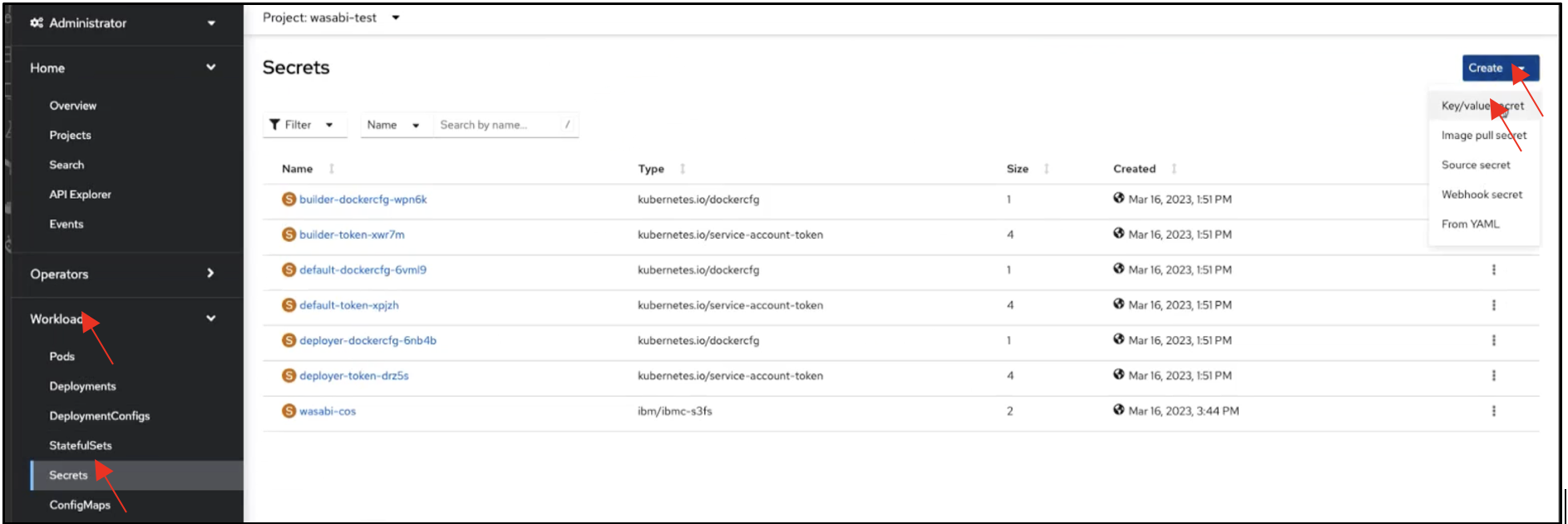

Navigate to Workloads, then to Secrets. Expand the Create drop-down and select Key/value secret.

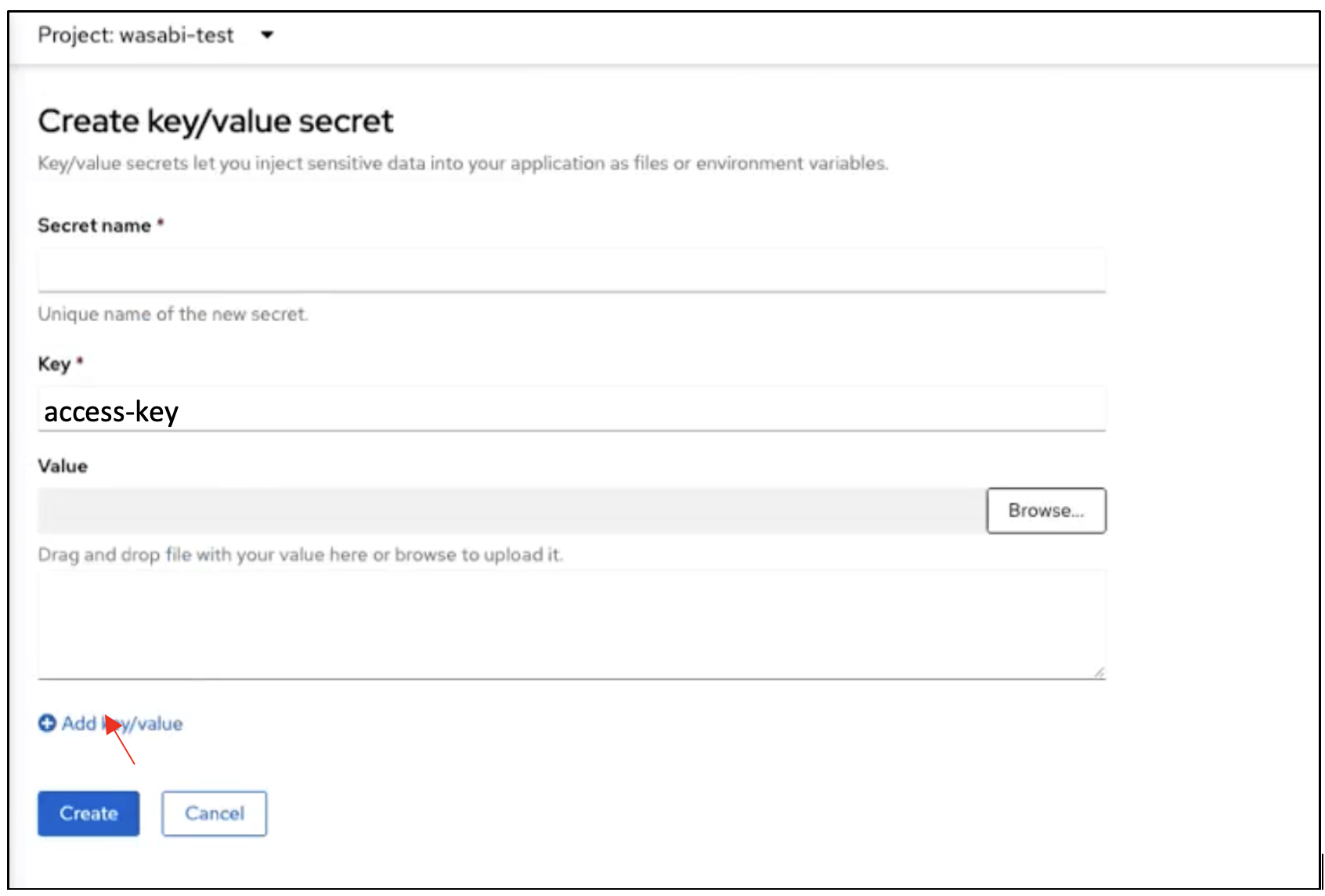

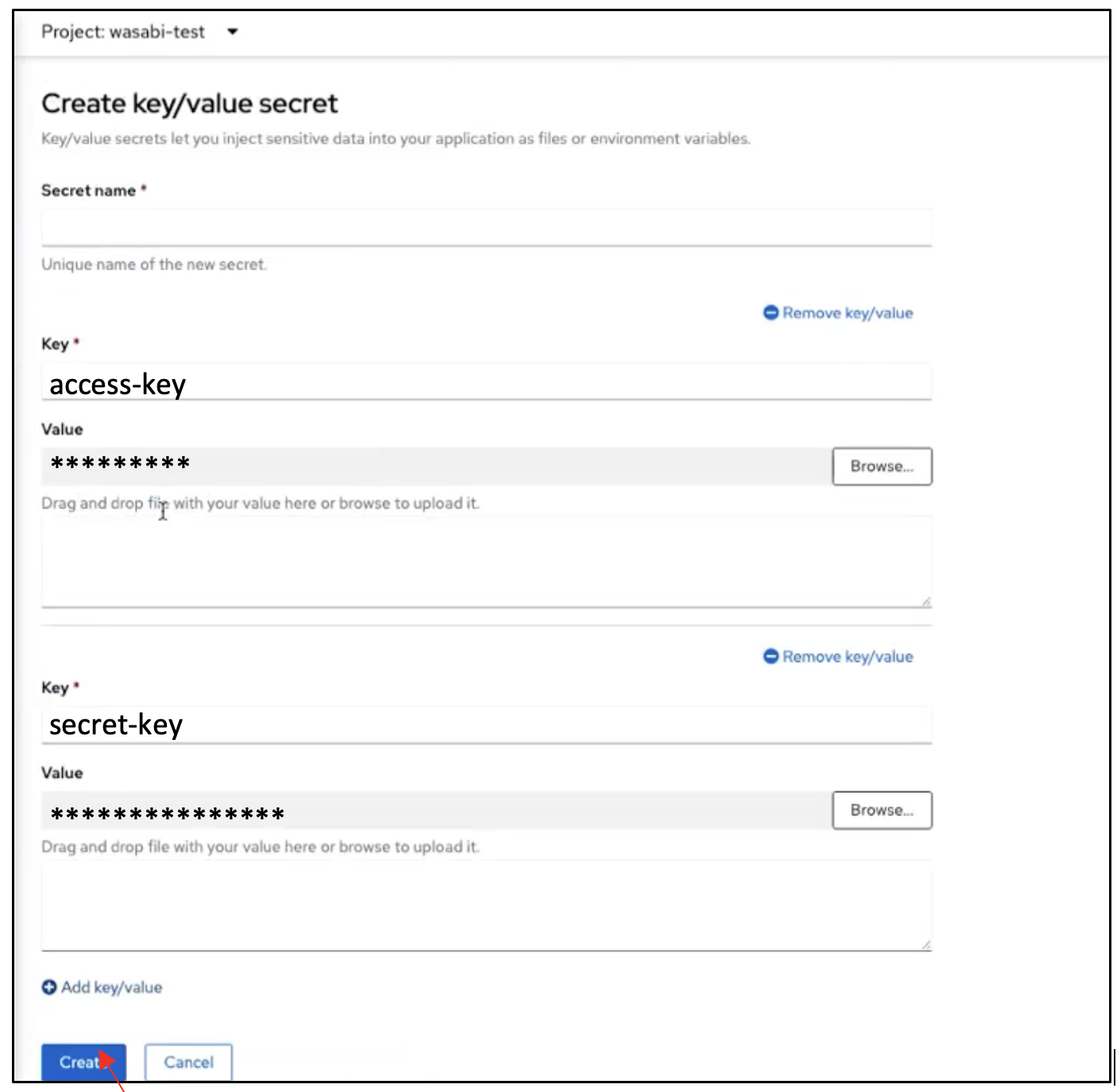

On the Create key/value secret page, provide a name for the secret in the Secret name field.

In the Key field, enter the term "access-key". In the Value field, enter the access key of your Wasabi account.

Click Add key/value to add the secret key.

In the Key field, enter the term "secret-key". In the Value field, enter the secret key of your Wasabi account. Click Create.

We now have a secret created for our OpenShift cluster.
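
For readers who prefer a manifest to the web console, the sketch below builds a roughly equivalent Secret and prints it as JSON. The function name `wasabi_secret_manifest` and the placeholder credentials are hypothetical. The name `wasabi-cos` and namespace `wasabi-test` are taken from the PVC example later in this article, whose `ibm.io/secret-name` annotation refers to the secret by name. The `Opaque` type is an assumption; the IBM Object Storage plugin documentation may call for a plugin-specific secret type, so confirm it before relying on this.

```python
import base64
import json


def wasabi_secret_manifest(name: str, namespace: str,
                           access_key: str, secret_key: str) -> dict:
    """Build a Kubernetes Secret holding the two keys described above."""

    def b64(value: str) -> str:
        # Values under a Secret's "data" field must be base64-encoded strings.
        return base64.b64encode(value.encode("utf-8")).decode("ascii")

    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "Opaque",  # ASSUMPTION: verify the type the plugin expects
        "data": {
            "access-key": b64(access_key),
            "secret-key": b64(secret_key),
        },
    }


# Placeholder credentials; substitute the real Wasabi key pair.
manifest = wasabi_secret_manifest(
    "wasabi-cos", "wasabi-test", "EXAMPLE_ACCESS_KEY", "EXAMPLE_SECRET_KEY"
)
print(json.dumps(manifest, indent=2))
```

The printed JSON can be saved to a file and applied with `kubectl apply -f`, which accepts JSON as well as YAML manifests.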

Below is a prototypical PVC for a workload that wants to mount an S3 bucket into the filesystem:

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: s3fs-test-pvc
      namespace: wasabi-test
      annotations:
        volume.beta.kubernetes.io/storage-class: "ibmc-s3fs-cos"
        ibm.io/auto-create-bucket: "true"
        ibm.io/auto-delete-bucket: "false"
        ibm.io/bucket: "davet-test-011"
        ibm.io/object-path: "" # Bucket's sub-directory to be mounted (OPTIONAL)
        ibm.io/endpoint: https://s3.wasabisys.com
        ibm.io/region: "us-east-1"
        ibm.io/secret-name: "wasabi-cos"
        ibm.io/stat-cache-expire-seconds: "" # stat-cache-expire time in seconds; default is no expire
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi # fictitious value

Below is an example pod specification that would use that PVC:

    apiVersion: v1
    kind: Pod
    metadata:
      name: s3fs-test-pod
      namespace: wasabi-test
    spec:
      containers:
        - name: s3fs-test-container
          image: anaudiyal/infinite-loop
          volumeMounts:
            - mountPath: "/mnt/s3fs"
              name: s3fs-test-volume
      volumes:
        - name: s3fs-test-volume
          persistentVolumeClaim:
            claimName: s3fs-test-pvc

In this example, the Wasabi bucket `davet-test-011` is mounted at `/mnt/s3fs` (or whatever path is specified in `mountPath`). You can verify the mount by inspecting the pod.

    $ kubectl exec -it s3fs-test-pod -n wasabi-test -- bash
    root@s3fs-test-pod:/#
    root@s3fs-test-pod:/# df -Th | grep s3
    s3fs fuse.s3fs 256T 0 256T 0% /mnt/s3fs
    root@s3fs-test-pod:/# cd /mnt/s3fs/
    root@s3fs-test-pod:/mnt/s3fs# ls
    root@s3fs-test-pod:/mnt/s3fs#
    root@s3fs-test-pod:/mnt/s3fs# echo "Satellite and Wasabi integration" > sample.txt
    root@s3fs-test-pod:/mnt/s3fs# ls
    sample.txt
    root@s3fs-test-pod:/mnt/s3fs# cat sample.txt
    Satellite and Wasabi integration
    root@s3fs-test-pod:/mnt/s3fs#
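
The manual check above can also be scripted. The sketch below writes a file under a mount path, reads it back, and reports whether the contents match. The function name `round_trip` is hypothetical, and running it inside the pod assumes the container image includes a Python interpreter, which the example image may not. The demo at the bottom runs against a temporary directory so the logic can be tried anywhere.

```python
import tempfile
from pathlib import Path


def round_trip(mount_path: str, filename: str = "sample.txt",
               text: str = "Satellite and Wasabi integration\n") -> bool:
    """Write a file under mount_path, read it back, and compare the contents."""
    mount = Path(mount_path)
    if not mount.is_dir():
        raise FileNotFoundError(f"{mount_path} is not a directory")
    target = mount / filename
    target.write_text(text, encoding="utf-8")
    return target.read_text(encoding="utf-8") == text


# Dry run against a temporary directory; inside the pod, pass "/mnt/s3fs" instead.
with tempfile.TemporaryDirectory() as scratch:
    print(round_trip(scratch))
```

A result of `True` only shows that the mounted filesystem accepts writes and reads. To confirm the object reached Wasabi, look for `sample.txt` in the bucket through the Wasabi console or an S3 client.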