How do I use CockroachDB with Wasabi?

CockroachDB (CRDB) has been validated for use with Wasabi as a cloud storage target for Backup and Restore of a cluster, database, or table.

CockroachDB is a Cloud-Native Distributed SQL Database designed to scale fast and survive disasters. CockroachDB natively supports S3-compatible Wasabi storage without any other product or service dependency.

Prerequisites

An active Wasabi cloud storage account.

CockroachDB versions 21.2 and later. Refer to Release Support Policy for the latest releases.

Architecture Diagram

The diagram below depicts the reference architecture for using Wasabi with CockroachDB.

Creating a Wasabi Bucket

Create a bucket in the Wasabi region of your choice for backing up the CockroachDB database. If the goal is to use Object Lock enabled buckets, refer to the Object Lock section, below. Once the bucket is created, create a user with the correct roles and policies, and download the Wasabi API access key set by following the steps in 3—Creating a User Account and Access Key. The user should have read/write access to the Wasabi bucket.

Creating Backup And Restore on CRDB

Users can create full or incremental backups of a cluster, database, or table.Taking regular backups of your data is an operational best practice.

The following use cases describe using Wasabi's cloud storage for CockroachDB bulk operations. Wasabi recommends enabling Object Lock on Wasabi to protect the validity of CockroachDB backups. For more detail on creating immutable backups, refer to Object Lock, below.

Disaster Recovery

Continual Full and/or Incremental Backups

Recovery points from a potential Catastrophic Failure

CockroachDB Backups are primarily needed for disaster recovery. Continually taking full and incremental CockroachDB backups create a backup collection in the target Wasabi bucket. The backups are stored at the collection URI which is passed in a BACKUP or RESTORE statement.

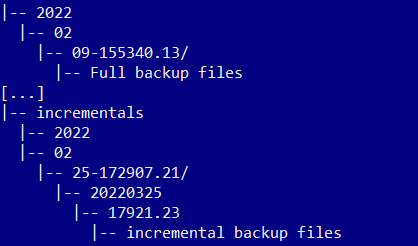

The following example depicts a backup collection created in a single Wasabi bucket.

A user with sufficient database backup or restore privileges and sufficient storage permissions can initiate/configure/schedule backup/restore operations via SQL statements using any of the supported database client interfaces or tools.

A user with sufficient database backup or restore privileges and sufficient storage permissions can initiate/configure/schedule backup/restore operations via SQL statements using any of the supported database client interfaces or tools.

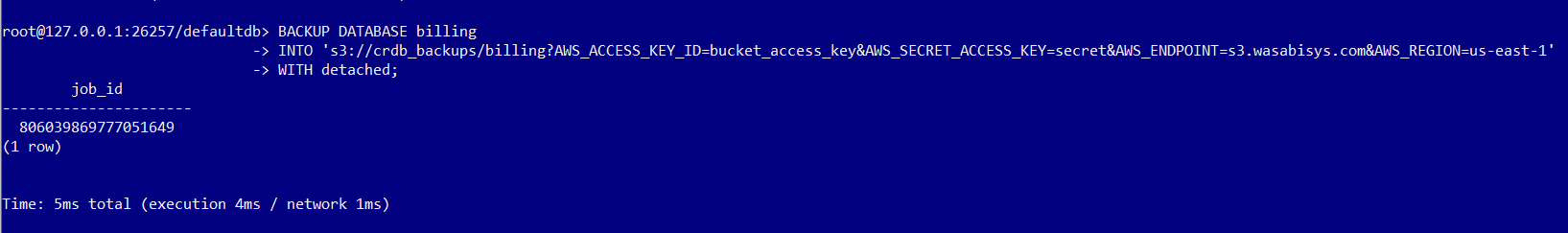

The below statement provides an example to issue a one-time full backup:

BACKUP DATABASE billing INTO 's3://crdb_backups/billing?AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1' WITH detached;The statement below provides an example to restore from a latest backup:

RESTORE FROM LATEST IN 's3://crdb_backups?AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1';Below is an example of a statement to restore from an incremental backup:

RESTORE FROM '2021/03/23-213101.37' IN 's3://crdb_backups?AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1';The above example discusses the use of the us-east-1 region. Refer to Service URLs for Wasabi's Storage Regions to use the correct values for AWS_ENDPOINT and AWS_REGION parameters based on the location of your bucket.

The S3_STORAGE_CLASS parameter in the URI does not apply to Wasabi since Wasabi offers a single storage class.

The example below shows a representation in CockroachDB's interactive SQL client.

CockroachDB Cluster Migration:

Forklift migration from On-Premise to CockroachCloud

Bulk Import PGDUMP or MYSQLDUMP

Successive backup-restores can be leveraged on the CockroachDB cluster to migrate the data and metadata to CockroachCloud using a Wasabi bucket as staging storage. For heterogeneous migrations, such as PostgreSQL or MySQL to CockroachDB, a temporary Wasabi storage bucket provides a cost-effective staging for data files for export-import style migrations. CockroachDB can be used to IMPORT data directly from a Wasabi bucket.

The following example shows importing a complete database (schema and data) from a PostgreSQL database dump.

IMPORT PGDUMP 's3://migration/pgdump.sql? AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1' WITH ignore_unsupported_statements;The below example shows how to import a single table from a MySQL database dump.

IMPORT TABLE customers FROM MYSQLDUMP 's3://migration/customers.sql? AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1' WITH skip_foreign_keys;The above example discusses the use of the us-east-1 region. Refer to Service URLs for Wasabi's Storage Regions to use the correct values for AWS_ENDPOINT and AWS_REGION parameters based on the location of your bucket.

The S3_STORAGE_CLASS parameter in the URI does not apply to Wasabi since Wasabi offers a single storage class.

CockroachDB Cluster Cloning

Create a Staging environment with Production data

Wasabi can be used to store backups of CockroachDB databases to run a cloning operation by restoring a recently taken full-backup. This procedure may be used for cluster cloning by leveraging a point-in-time copy of a production database backup. Refer to the below example to restore the latest backup.

RESTORE FROM LATEST IN 's3://crdb_backups?AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1';Staging Storage for Data Export-Import

Data exchanges in CockroachDB bulk data exports-imports require a staging area for the data files. A Wasabi storage bucket can be used as a staging area for CockroachDB exports and imports.

The below example shows the command for data export.

EXPORT INTO CSV 's3://staging/customers.csv? AWS_ACCESS_KEY_ID=bucket_access_key&AWS_SECRET_ACCESS_KEY=secret&AWS_ENDPOINT=s3.wasabisys.com&AWS_REGION=us-east-1' WITH delimiter = '|' FROM TABLE bank.customers;The above example discusses the use of the us-east-1 region. Refer to Service URLs for Wasabi's Storage Regions to use the correct values for AWS_ENDPOINT and AWS_REGION parameters based on the location of your bucket.

The S3_STORAGE_CLASS parameter in the URI does not apply to Wasabi since Wasabi offers a single storage class.

Object Lock

CockroachDB supports writing backups to Wasabi buckets with Object Lock enabled. This allows users to store database backups using a write-once-read-many (WORM) model.

The following steps describe the procedure to create Object Lock enabled buckets on the Wasabi console. To create object-locked buckets using S3-API, refer to the documentation on Wasabi S3 API Reference.

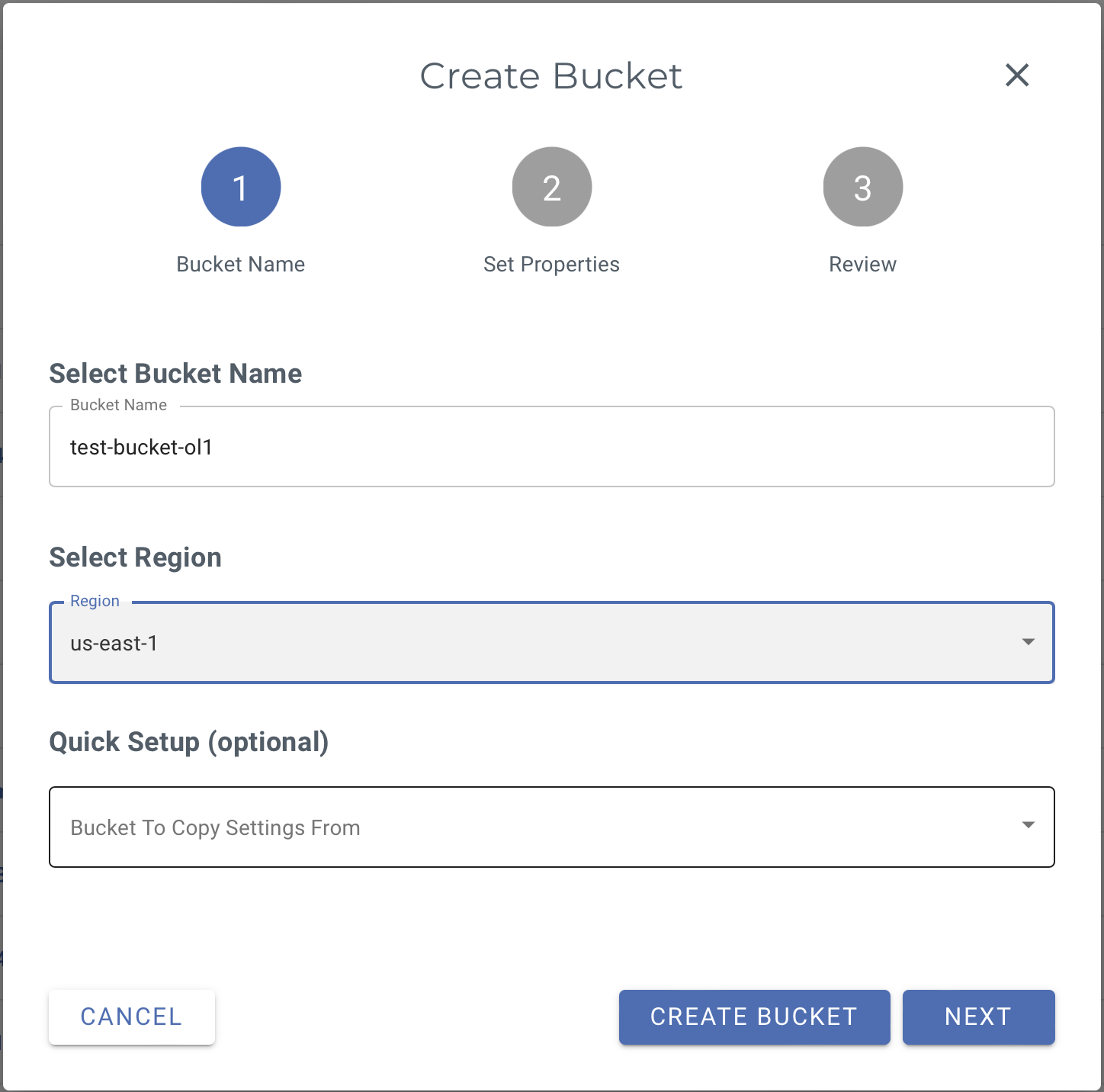

On the Wasabi console, click Create Bucket and input the Bucket Name and select the region. Click Next.

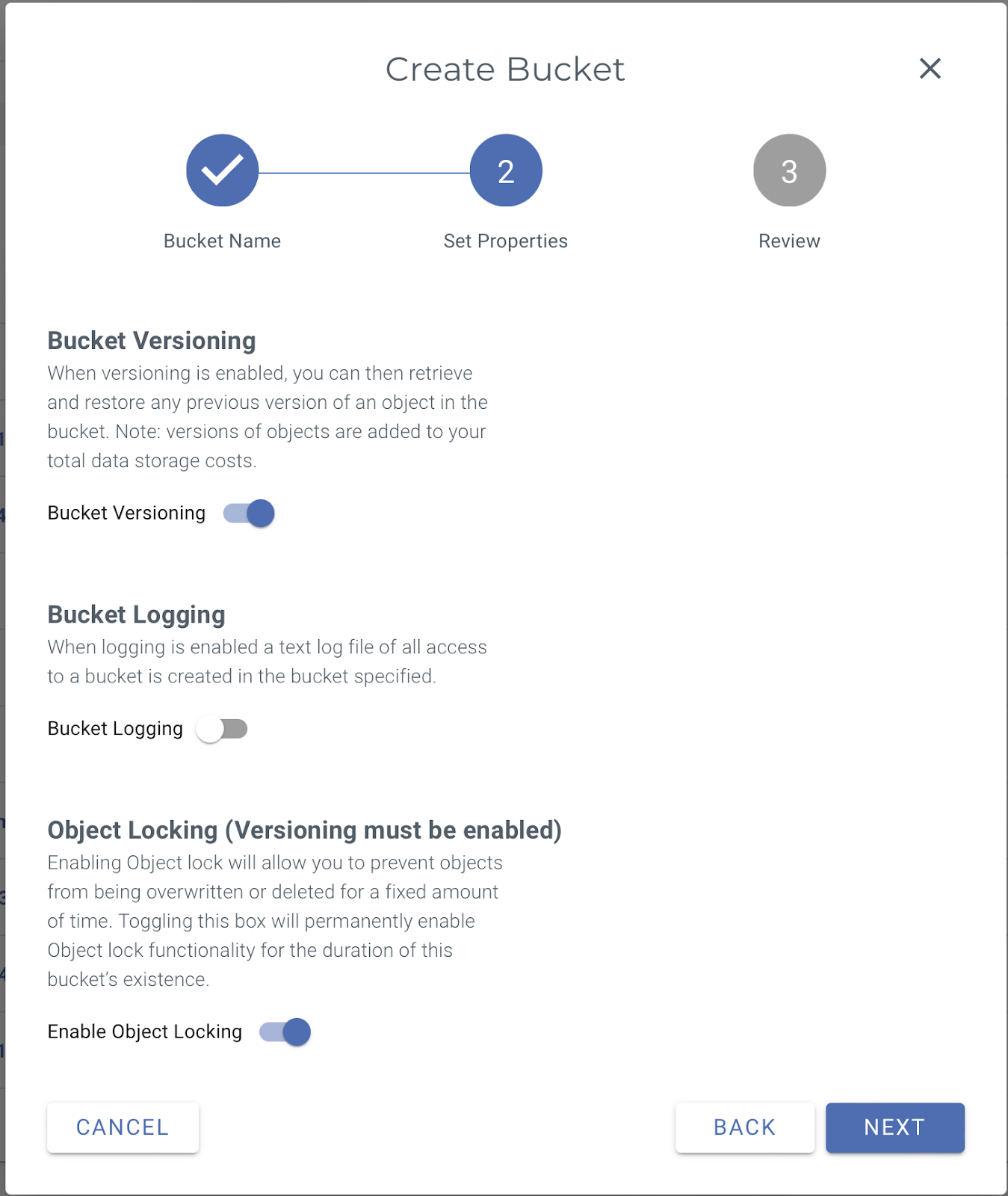

In the Set Properties window, enable versioning by setting Bucket Versioning and enable Object Locking by setting Enable Object Locking. Then click Next and then review the details and click Create Bucket to create the bucket.

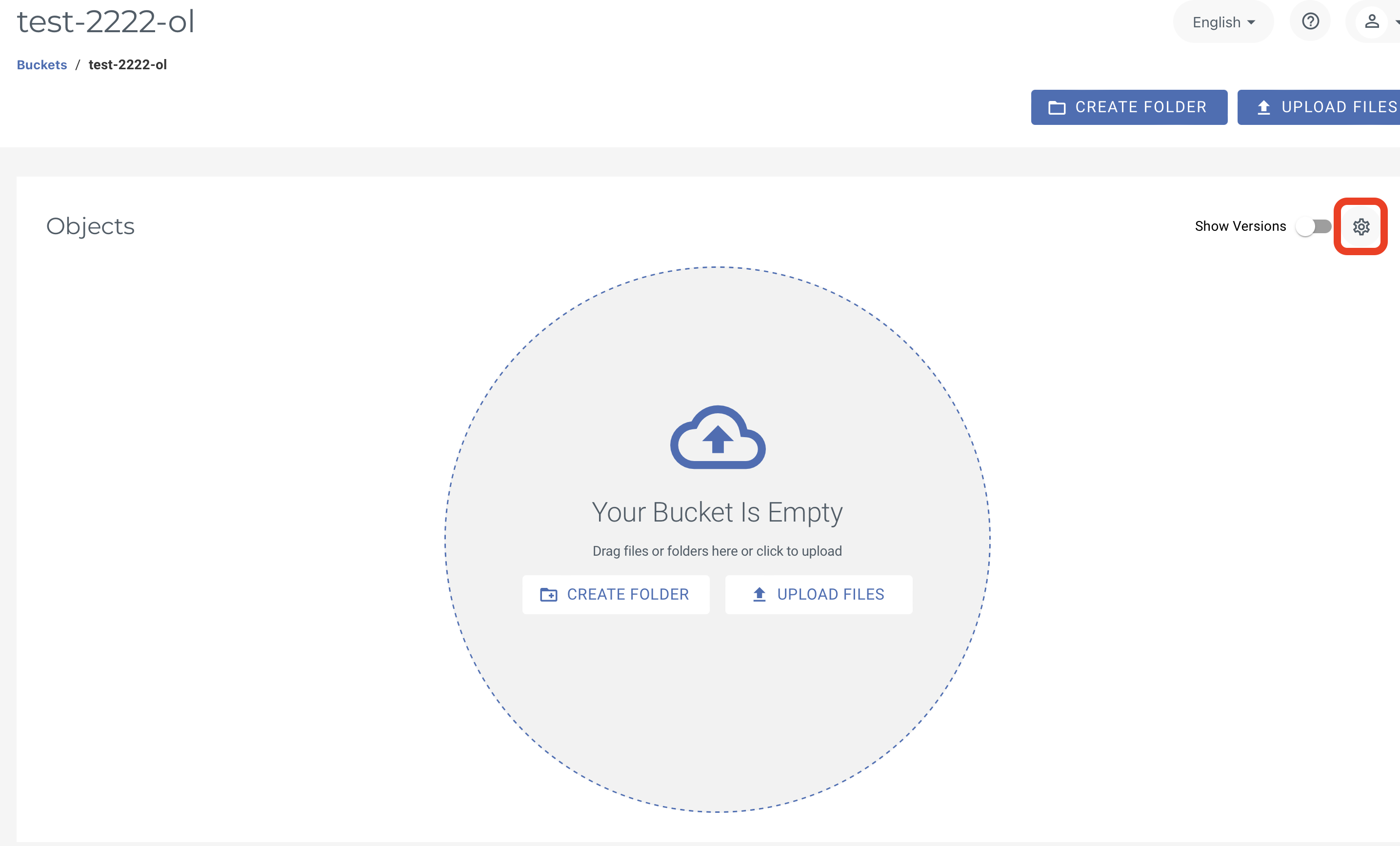

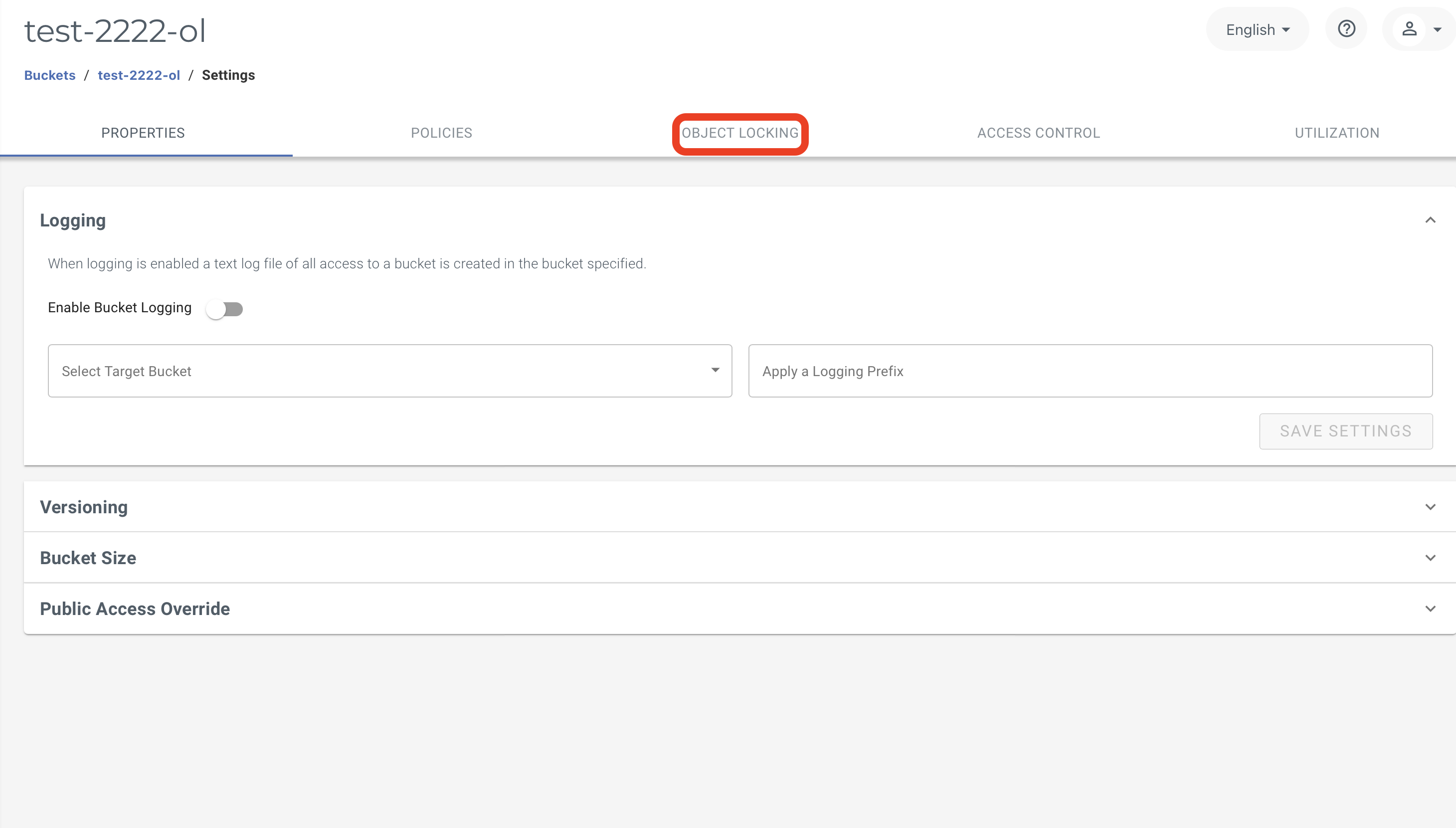

Once the bucket is created, select the bucket and click on Settings.

On the settings page, click Object Locking.

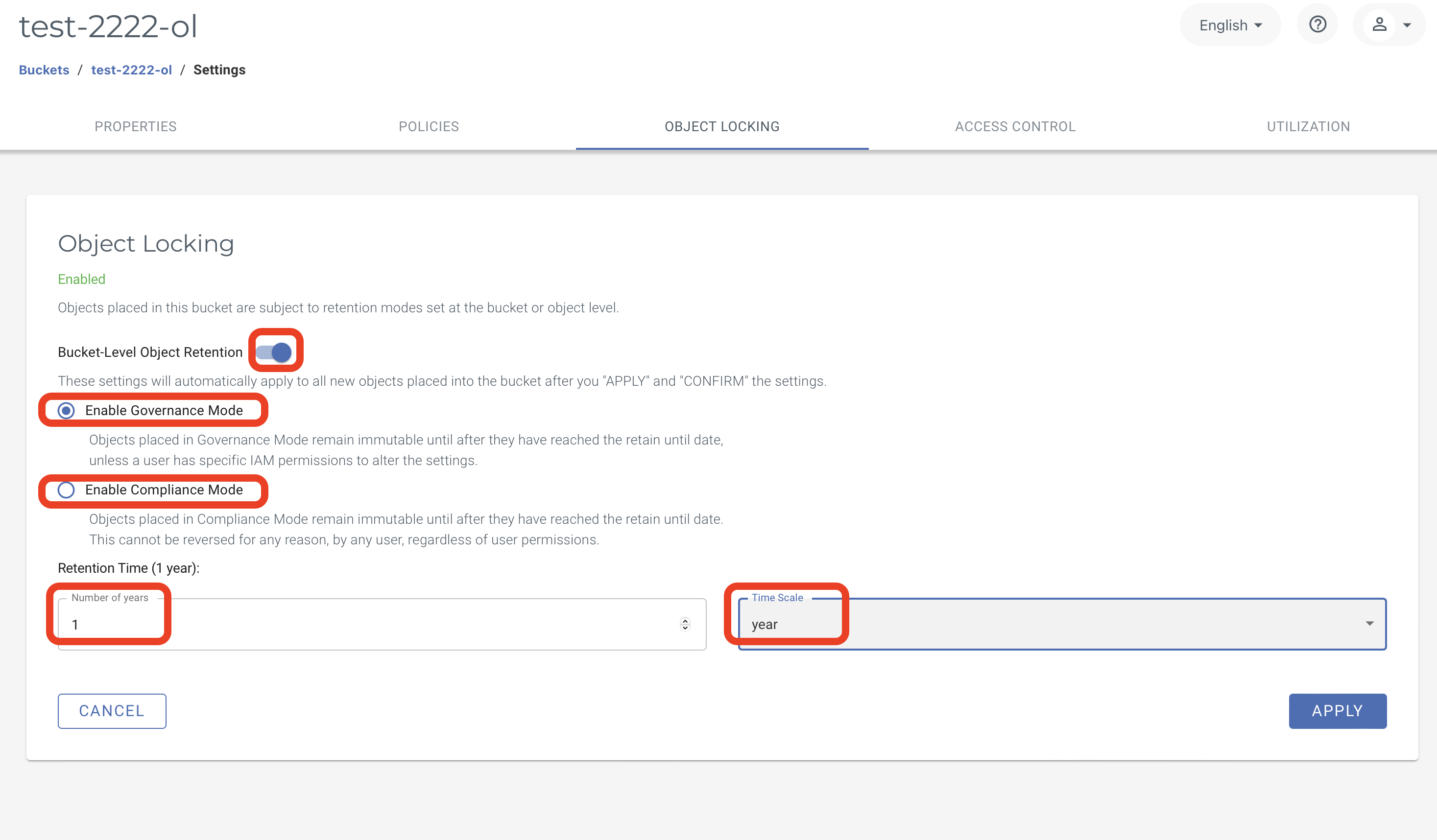

In the Object Locking tab, enable Bucket-Level Object Retention. For Governance mode select Enable Governance Mode. To enable Compliance mode, select Enable Compliance Mode. Set the retention time based on the customer’s retention policies for preserving backups.

When defining the retention time for the bucket, customer requirements as well as the size of the database need to be considered. Once backups are uploaded to the bucket, they cannot be deleted or modified until after the retention period has expired and will incur a storage charge.

Once the Object Lock enabled bucket is created, follow the steps in the Backup and Restore on CRDB section to create immutable backups.